Teaching Current Directions in Psychological Science

Aimed at integrating cutting-edge psychological science into the classroom, Teaching Current Directions in Psychological Science offers advice and how-to guidance about teaching a particular area of research or topic in psychological science that has been the focus of an article in the APS journal Current Directions in Psychological Science. Current Directions is a peer-reviewed bimonthly journal featuring reviews by leading experts covering all of scientific psychology and its applications and allowing readers to stay apprised of important developments across subfields beyond their areas of expertise. Its articles are written to be accessible to nonexperts, making them ideally suited for use in the classroom.

Visit the column for supplementary components, including classroom activities and demonstrations.

Visit David G. Myers at his blog “Talk Psych”. Similar to the APS Observer column, the mission of his blog is to provide weekly updates on psychological science. Myers and DeWall also coauthor a suite of introductory psychology textbooks, including Psychology (11th Ed.), Exploring Psychology (10th Ed.), and Psychology in Everyday Life (4th Ed.).

When Fiction Becomes Fact

By Cindi May and Gil Einstein

Whether you are reading your Twitter feed, a best-selling novel, or a newspaper, you are at risk: Historical and informational errors in our reading materials are more common than you might think. And if you read such errors, you are likely to report them as fact at some point in the future.

This repetition of misinformation occurs because people often encode and remember material they read without engaging in careful analysis or evaluation, and thus incorporate that information into their general knowledge (Andrews & Rapp, 2014; Singer, 2013). The fact that we remember, rely on, and repeat inaccurate information is well documented (e.g., Fazio, Barber, Rajaram, Ornstein, & Marsh, 2013; Jacovina, Hinze, & Rapp, 2014; Marsh, Meade, & Roediger, 2003). It can occur even for information that we know is wrong (Rapp & Braasch, 2014).

In many respects this tendency is a little troubling, as misinformation may influence our judgment and decision-making — perhaps even affecting such important choices as our vote in a presidential election. APS Fellow David N. Rapp (2016) offers important insights into why we make these mistakes and outlines interventions that have — and have not — worked to reduce our reliance on inaccurate information that we have read.

To give students a sense of the pervasiveness of the issue, first ask them to discuss in small groups instances in which they fell prey to inaccurate information, perhaps retweeting or sharing the “news” on social media. Then ask them, while working in those groups, to judge which of the following pieces of information are fact or fiction:

- Russia has a larger surface area than Pluto.

- On Jupiter and Saturn, it rains diamonds.

- The Ebola virus has been shown to be airborne in some cases.

- An 8-year-old girl was killed in the Boston Marathon bombing.

- After the November 2015 terrorist attacks in Paris, the lights on the Eiffel Tower were dimmed as a sign of solidarity with the victims.

- During Hurricane Sandy, the Statue of Liberty was threatened by violent winds and high waves.

- Oxford University is older than the Aztec empire.

- An octopus has three hearts.

The first two and last two items are true. The remaining items are all examples of misinformation that went viral through social media, and students may thus be likely to report them as true despite their falsity.

Relaying misinformation is obviously embarrassing. In some cases, it can damage our personal or professional lives. Ask students to suggest ways to protect against falsehoods we encounter when we read. The following techniques are likely to be suggested, but research demonstrates that none of them are effective:

- Warn people that the material they are reading contains errors. (People warned before reading errors show little reduction in reliance on those inaccuracies; Eslick, Fazio, & Marsh, 2011.)

- Ask people to retrieve accurate information (e.g., have them name the capital of Russia and most will correctly report Moscow) before exposing them to misinformation (e.g., that St. Petersburg is the capital of Russia). (People who retrieve correct information either 2 weeks prior to or immediately before reading misinformation continue to rely on falsehoods; Fazio et al., 2013; Rapp, 2008.)

- Present materials more slowly and reduce the complexity of the information. (Even with easier texts and more time, people persist in their assimilation of false information; Fazio & Marsh, 2008.)

- Test people long after exposure to the misinformation so that it fades from memory. (Delayed testing actually can increase reliance on errors; Appel & Richter, 2007.)

Why do these errors persist? Rapp argues that the human mind is designed for efficiency so that people are quick to perceive, recognize, respond to, and remember stimuli. For example, we tend to rely on recent memories because they are easily accessed (Benjamin, Bjork, & Schwartz, 1998), and we tend not to tag the reliability or quality of sources when reading because that requires time and effort (Sparks & Rapp, 2011). Both of these tendencies improve efficiency, and although that efficiency generally makes us more effective processors when information is accurate, it does leave us vulnerable to misinformation.

How can we mitigate these errors? Rapp and colleagues note that highly implausible errors are less likely to be adopted as true (Hinze, Slaten, Horton, Jenkins, & Rapp, 2014). Furthermore, if misinformation comes from a source known to be consistently unreliable, people are less likely to use it (Andrews & Rapp, 2014). Beyond these observations, Rapp and colleagues have developed two techniques for reducing reliance on misinformation, each informed by an understanding of the way cognitive processing works. See if your students can use what they know about human memory as well as Rapp’s theory about the source of these errors to generate these effective strategies:

- Force people to tag information as incorrect. Asking people to correct inaccuracies in pen as they read reduces the impact of the misinformation (Rapp, Hinze, Kohlhepp, & Ryskin, 2014).

- Present false information in a context that is clearly separate from reality. The fantasy context prevents incorporation of misinformation into general knowledge (e.g., if a historical error occurs in a fantasy novel versus realistic fiction; Rapp, Hinze, Slaten, & Horton, 2014).

This research offers two interesting “step back” lessons. First, human behavior is not intuitive; we need science to understand, predict, and control it. Many of the “obvious” solutions for combatting false information (e.g., warning people that material contains misinformation) do not work. Second, the identification of effective solutions not only is important for reducing the perpetuation of misinformation but also advances our understanding of how the mind works.

Conservatives, Liberals, and the Distrust of Science

By David G. Myers

On many issues, a gulf exists between what the public believes and what scientists have concluded. Is it safe to eat genetically modified (GM) foods? Yes, say 37% of US adults and 88% of 3,447 American Association for the Advancement of Science (AAAS) members (both surveyed by the Pew Research Center; Funk & Rainie, 2015).

Is climate change “mostly due to human activity”? Yes, say 50% of US adults and 87% of AAAS members, not to mention 97% of climate experts (Cook et al., 2016).

The public-opinion-versus-science divergence continues: Have humans evolved over time? Are childhood vaccines such as the measles, mumps, and rubella (MMR) vaccine safe? Is it safe to eat food grown with pesticides? (Yes, yes, and yes, say most scientists, but no, no, and no, says much of the public.)

What gives? What drives the widespread rejection of scientific findings?

In some instances, note Stephan Lewandowsky and Klaus Oberauer (2016), the public is simply misinformed. A fraudulent but widely publicized report of a link between the MMR vaccine and autism led to a drop in MMR vaccinations and an increase in measles and mumps (Poland & Jacobson, 2011). People may doubt climate change based on the weather they are currently experiencing plus a lack of education about greenhouse gases, rising global temperatures, retreating glaciers, increasing extreme weather, and rising seas — albeit all with imperceptible gradualness. (Some misinformation, Lewandowsky and Oberauer remind us, is funded by corporate interests, as when the tobacco industry worked to counter smoking research.)

In many other instances, contend Lewandowsky and Oberauer, “scientific findings are rejected … because the science is in conflict with people’s worldviews, or political or religious opinions” (p. 217). A libertarian who prizes the unregulated free market will be motivated to discount evidence that government regulations serve the common good: that gun control saves lives, that livable minimum wages and social security support human flourishing, that future generations need climate-protecting regulations. A liberal may be similarly motivated to discount science pertaining to the toxicity of teen pornography exposure, the benefits of marriage versus personal freedom, or the innovations incentivized by the free market. Partisans on both sides may, thanks to the ever-present confirmation bias, selectively attend to data and voices that confirm their preexisting views.

Voices from the right and left both may dismiss scientific expertise, but on different issues:

- From some on the right: “Global warming is an expensive hoax!” (Donald Trump, 2014).

- From some on the left: GM foods “should not be released into the environment” (Greenpeace International, n.d.).

To explore this association of political views with acceptance of scientific conclusions, instructors can (a) mine survey data and (b) conduct a simple class experiment.

The National Opinion Research Center at the University of Chicago makes data from its periodic General Social Survey of adult Americans easily available. Visit this site and note that a click on the “search” box at the top will enable you or your students to search for variables of interest (as I did by entering the words “climate,” “gun,” “nuclear,” and “genetically modified”). Then, as a class demonstration or out-of-class exercise, you can investigate the following:

- Political views and climate-change concerns. Enter “tempgen1” in the row box. Enter “polviews” in the column box. Click “run table” and you will see that “temperature rise from climate change” is a big concern for liberals, but not for conservatives. (To see the complete question text, click on “output options” before running the table.)

- Political views and gun safety. Repeat the exercise, this time with “gunlaw” or “gunsales” in the row box.

- Political views and nuclear energy as dangerous. Enter “nukegen” in the row box.

- Political views and GM foods. Enter “eatGM” in the row box — and note that, unlike in the three previous analyses, there is actually, in this sample, little association between political views and attitudes toward GM foods.
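For instructors who want students to see what such a row-by-column table computes, the following is a minimal sketch in Python. It assumes the table reports column percentages (the share of each political group giving each answer). The variable names "tempgen1" and "polviews" come from the exercise above; the response labels and the eight records are invented for illustration and are not General Social Survey data.

```python
from collections import Counter, defaultdict


def column_percentages(records, row_var, col_var):
    """Cross-tabulate two variables and express each cell as a
    percentage of its column total."""
    cell_counts = Counter((r[row_var], r[col_var]) for r in records)
    col_totals = Counter(r[col_var] for r in records)
    table = defaultdict(dict)
    for (row, col), n in cell_counts.items():
        table[row][col] = round(100 * n / col_totals[col], 1)
    return {row: dict(cols) for row, cols in table.items()}


# Invented toy records (NOT survey data): four "liberal" and four
# "conservative" respondents with hypothetical answer labels.
toy_records = (
    [{"polviews": "liberal", "tempgen1": "dangerous"}] * 3
    + [{"polviews": "liberal", "tempgen1": "not dangerous"}] * 1
    + [{"polviews": "conservative", "tempgen1": "dangerous"}] * 1
    + [{"polviews": "conservative", "tempgen1": "not dangerous"}] * 3
)

table = column_percentages(toy_records, row_var="tempgen1", col_var="polviews")
for answer, by_group in table.items():
    print(answer, by_group)
```

Reading down a column shows how one political group split its answers; comparing across a row shows whether the groups differ, which is the comparison each exercise above asks students to make.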

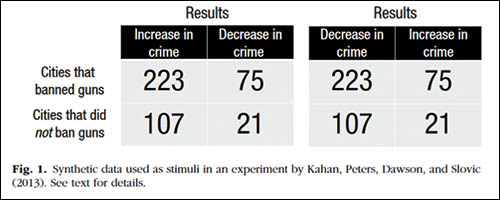

Lewandowsky and Oberauer also report on an experiment by Dan M. Kahan, APS Fellow Ellen M. Peters, Erica C. Dawson, and APS Fellow Paul Slovic (2013) that lends itself to a class replication. Show half the students the data on the left side of Figure 1, from a hypothetical study of the outcomes in various cities of banning or not banning concealed handguns, and show the other half the mirror-image data on the right side.

Ask each student: What result does the study support? Compared with cities without handgun bans, did cities that enacted a concealed handgun ban fare (a) better or (b) worse?

Then ask students whether they would describe themselves as tending to be generally more conservative or liberal.

Note that in the data on the left, cities with the ban showed a 3-to-1 ratio of crime increases to decreases, compared with a 5-to-1 ratio in cities without the ban (thus the ban was effective). Shown these data, most liberals recognized the result … but failed to draw the parallel conclusion (i.e., that the ban was ineffective) if shown the mirror-image data on the right. Conservative interpretations were reversed. Are your students’, too?
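The ratio comparison behind the correct answer can be made explicit with a short Python sketch. The city counts below are hypothetical, chosen only so that they reproduce the 3-to-1 and 5-to-1 ratios described in the text; they are not the values printed in Figure 1, and the function names are illustrative.

```python
from fractions import Fraction
from typing import Tuple


def increase_to_decrease_ratio(increased: int, decreased: int) -> Fraction:
    """Number of cities where crime rose per city where crime fell."""
    return Fraction(increased, decreased)


def ban_fared(ban: Tuple[int, int], no_ban: Tuple[int, int]) -> str:
    """Compare the two rows of the 2x2 table by their ratios.

    Each row is (cities with crime increase, cities with crime decrease).
    A lower increase-to-decrease ratio means that group of cities fared better.
    """
    ban_ratio = increase_to_decrease_ratio(*ban)
    no_ban_ratio = increase_to_decrease_ratio(*no_ban)
    if ban_ratio < no_ban_ratio:
        return "better"
    if ban_ratio > no_ban_ratio:
        return "worse"
    return "same"


# Hypothetical counts matching the stated ratios (3-to-1 and 5-to-1).
left_ban, left_no_ban = (225, 75), (105, 21)
# Mirror-image version: the increase and decrease columns are swapped.
right_ban, right_no_ban = (75, 225), (21, 105)

print(ban_fared(left_ban, left_no_ban))    # better
print(ban_fared(right_ban, right_no_ban))  # worse
```

The point for students is that the answer depends on comparing the two ratios, not on any single cell of the table, so the same numbers support opposite conclusions once the column labels are swapped.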

Given that people’s personal biases filter the science they accept, how can we increase critical thinking, science literacy, and acceptance of evidence? In the case of climate change, we might

- relate the evidence to people’s preexisting values (e.g., clean energy boosts national security by reducing dependence on foreign oil);

- connect the topic with local concerns (e.g., the threat of drought matters to Texans, Californians, and Australians; the risk of flooding affects Floridians and the Dutch);

- frame the issues positively (e.g., “carbon offsets” are more palatable than “carbon taxes”; reducing carbon emissions is healthy, regardless of how a person feels about climate change); and

- make communications credible and memorable (e.g., use credible communicators, including conservative messengers to conservatives; underscore the broad-based scientific consensus; show climate-change doubters pictures of rising seas and extreme weather).

Distrust of science runs high among some who are religiously conservative (Pew, 2007). To increase enthusiasm for science among such students, I remind them of religion’s support for the founding of science, which was rooted in a spirit of humility that recognizes human fallibility. In that spirit, I suggest, let us welcome whatever insights science has to offer. As St. Paul advised, “Test everything; hold fast to what is good.”

References

Andrews, J. J., & Rapp, D. N. (2014). Partner characteristics and social contagion: Does group composition matter? Applied Cognitive Psychology, 28, 505–517.

Appel, M., & Richter, T. (2007). Persuasive effects of fictional narratives increase over time. Media Psychology, 10, 113–134.

Benjamin, A. S., Bjork, R. A., & Schwartz, B. L. (1998). The mismeasure of memory: When retrieval fluency is misleading as a metamnemonic index. Journal of Experimental Psychology: General, 127, 55–68.

Cook, J., Oreskes, N., Doran, P., Anderegg, W., Verheggen, B., Maibach, E., … Rice, K. (2016). Consensus on consensus: A synthesis of consensus estimates on human-caused global warming. Environmental Research Letters, 11, 048002.

Eslick, A. N., Fazio, L. K., & Marsh, E. J. (2011). Ironic effects of drawing attention to story errors. Memory, 19, 184–191.

Fazio, L. K., Barber, S. J., Rajaram, S., Ornstein, P. A., & Marsh, E. J. (2013). Creating illusions of knowledge: Learning errors that contradict prior knowledge. Journal of Experimental Psychology: General, 142, 1–5.

Fazio, L. K., & Marsh, E. J. (2008). Slowing presentation speed increases illusions of knowledge. Psychonomic Bulletin & Review, 15, 180–185.

Funk, C., & Rainie, L. (2015). Chapter 3: Attitudes and beliefs on science and technology topics. In “Public and Scientists’ Views on Science and Society,” Pew Research Center. Retrieved from http://www.pewinternet.org/2015/01/29/chapter-3-attitudes-and-beliefs-on-science-and-technology-topics/

Greenpeace International. (n.d.). Genetic engineering. Retrieved from http://www.greenpeace.org/international/en/campaigns/agriculture/problem/genetic-engineering/

Hinze, S. R., Slaten, D. G., Horton, W. S., Jenkins, R., & Rapp, D. N. (2014). Pilgrims sailing the Titanic: Plausibility effects on memory for facts and errors. Memory & Cognition, 42, 305–324.

Jacovina, M. E., Hinze, S. R., & Rapp, D. N. (2014). Fool me twice: The consequences of reading (and rereading) inaccurate information. Applied Cognitive Psychology, 28, 558–568.

Kahan, D. M., Peters, E., Dawson, E. C., & Slovic, P. (2013). Motivated numeracy and enlightened self-government (Public Law Working Paper No. 307). Yale Law School.

Marsh, E. J., Meade, M. L., & Roediger, H. L. (2003). Learning facts from fiction. Journal of Memory and Language, 49, 519–536.

Pew Research Center. (2007, December 18). Science in America: Religious belief and public attitudes. Retrieved from www.pewforum.org/2007/12/18/science-in-america-religious-belief-and-public-attitudes

Poland, G. A., & Jacobson, R. M. (2011). The age-old struggle against the antivaccinationists. New England Journal of Medicine, 364, 97–99.

Rapp, D. N. (2008). How do readers handle incorrect information during reading? Memory & Cognition, 36, 688–701.

Rapp, D. N., & Braasch, J. L. G. (Eds.). (2014). Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences. Cambridge, MA: MIT Press.

Rapp, D. N., Hinze, S. R., Kohlhepp, K., & Ryskin, R. A. (2014). Reducing reliance on inaccurate information. Memory & Cognition, 42, 11–26.

Rapp, D. N., Hinze, S. R., Slaten, D. G., & Horton, W. S. (2014). Amazing stories: Acquiring and avoiding inaccurate information from fiction. Discourse Processes, 51(1–2), 50–74.

Singer, M. (2013). Validation in reading comprehension. Current Directions in Psychological Science, 22, 361–366.

Sparks, J. R., & Rapp, D. N. (2011). Readers’ reliance on source credibility in the service of interference generation. Journal of Experimental Psychology: Learning, Memory, & Cognition, 37, 230–247.

Trump, D. J. [realDonaldTrump]. (2014, Jan. 28). Snowing in Texas and Louisiana, record setting freezing temperatures throughout the country and beyond. Global warming is an expensive hoax! [Tweet]. Retrieved from https://twitter.com/realDonaldTrump/status/428414113463955457
