Tess Neal Examines the Nature—and Limits—of Expertise

APS Fellow Tess Neal, a clinical and forensic psychologist at Iowa State University, is focused on understanding and improving human judgment processes, particularly among trained experts.

Your research focuses on human judgment processes as they intersect with the law and the nature and limits of expertise. What led to your scientific interest in these subjects?

Early in my graduate training, I had a stellar statistics teacher and mentor—Jamie DeCoster—who taught us about exploratory vs. confirmatory processes in science and why those distinctions matter for a credible body of knowledge. A few years later, while completing my predoctoral internship in 2011, I was fascinated by high-profile criticisms of science that burst onto the scene and built on the ideas I’d learned in grad school (e.g., Ioannidis, 2012; Simmons et al., 2011).

I also went to graduate school in a state with robust capital punishment. I saw psychologists I knew and highly respected take capital case referrals, believing they could divorce their personal opinions and beliefs about the death penalty from their professional work, such as when conducting competence for execution evaluations. But I wondered if their beliefs were correct. Is it really possible for experts to compartmentalize their personal emotions and cognitions as separate from their professional cognitions and judgments? I designed a dissertation project to answer this question, for which I was fortunate to secure funding from the National Science Foundation (NSF). I learned about the bias blind spot through this work (i.e., our tendency to more easily recognize others’ biases than our own; Pronin et al., 2002), as this pattern clearly emerged in my dissertation data in psychologists’ reasoning about themselves and their colleagues (Neal & Brodsky, 2016).

As the credibility revolution got underway, I saw connections between the problems in expert judgment among the psychologists I was studying and problems in expert judgment among scientists more broadly. I determined that I wanted to spend my career digging in to better understand the nature and limits of expertise, and to identify solutions for bolstering real expert performance. This fascination with expert abilities and performance, and the correspondence between those capacities and what is believed about experts’ capacities, has never gone away and continues to motivate the work I do. It also underpins my open science philosophy as incoming editor for the journal Psychology, Public Policy, and Law (see https://osf.io/vwgy3).

What are some highlights of your research? What has it shown?

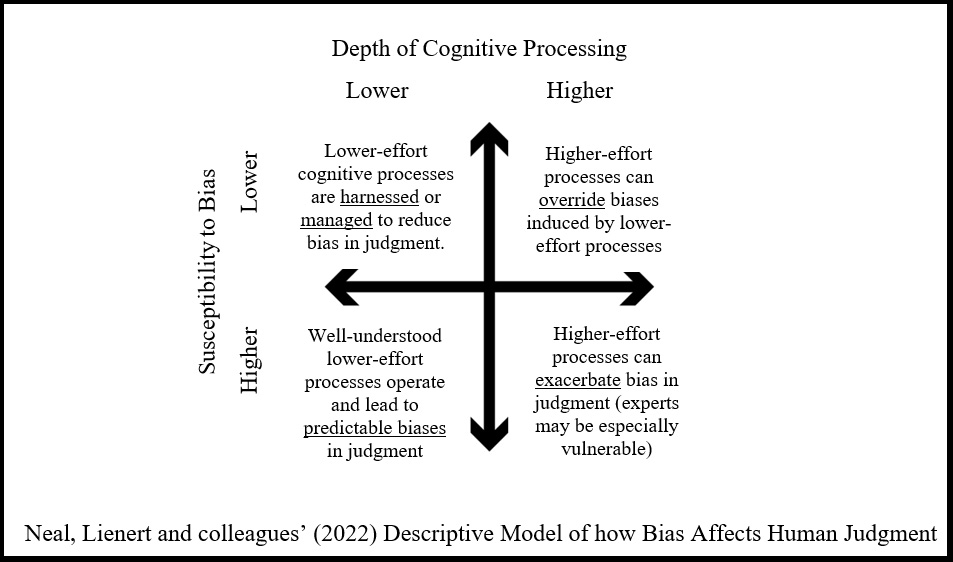

Do people (including experts themselves) have too much faith in the objectivity of expert judgment? People are vulnerable to a host of psychological biases that prejudice judgments and decisions. One might hope and expect experts to be more protected against these biases than the average person, yet the evidence supporting this expectation of expert objectivity is mixed at best. Some of my work wrestles with this conflicting evidence. For instance, a couple of years ago we published a general model of cognitive bias in human judgment, synthesizing a large body of evidence from diverse fields and theories about the circumstances under which people (including experts) are protected against bias and when they are particularly vulnerable to it (Neal, Lienert, et al., 2022). Our broad descriptive model outlines how cognitive biases affect human judgment across two interconnected continuous dimensions: depth of cognitive processing (ranging from low to high) and susceptibility to bias (ranging from low to high). The model is useful because it offers a framework for organized, testable predictions about the specific manifestations of bias that are likely to emerge, as well as strategies to mitigate these biases, which could improve justice and fairness in society in general and in the legal system in particular.

Beyond theoretical integrative work like that above, I’ve also done experimental work on expert judgment. For example, we have investigated the degree to which experts view themselves as objective and the degree to which those perceptions are tethered to reality. Across multiple preregistered studies with different methods and in different decision domains, we find robust evidence of the following (see, e.g., NSF #1655011):

- Experts can be vulnerable to predictable and systematic cognitive biases even in their professional judgments and decisions.

- Experts’ perceptions are generally not tethered well to the reality of their biases (i.e., experts exhibit a bias blind spot).

- Laypeople tend to believe experts are protected against bias (i.e., people have an illusion of expert objectivity).

There are real consequences for expert bias blindness and people’s illusion of expert objectivity, with clear policy relevance.

I’ve also done a lot of work to better understand and quantify what psychologists do in their expert consultations with the legal system and have put effort toward solving some of the problems identified through that work. For example, a few years ago we published a big project reporting on the wide variation in psychometric quality of the assessment tools psychologists use in preparing their reports and testimony. This evidence aids judges in making legal decisions that profoundly affect people’s lives, such as child custody, insanity pleas, sentencing determinations, and even eligibility for capital punishment. We also found that courts are not calibrated to that variation in quality, and little quality screening occurs (Neal et al., 2019; see also the APS write-up).

This work was covered by more than 500 newspapers and television news stations locally, nationally, and globally; in popular podcasts; in national and international news magazines, such as Wired; and on various popular blog sites. This work also led to a Fulbright Scholarship that brought me to the University of New South Wales in 2022 to study how country-level differences in evidence admissibility rules relate to the quality of expert evidence used in court. We further built on that work with a special issue offering comprehensive, credible reviews of the psychometric evidence for, and legal status of, some of the most commonly used psychological assessment measures in forensic evaluations (Neal, Sellbom, & de Ruiter, 2022).

Subsequently, I consulted with my Fulbright collaborator Kristy Martire on a major project for the Joint Federal/Provincial Mass Casualty Commission inquiry into Canada’s worst public mass shooting. Our role was to help the Commission understand whether a key piece of evidence for their inquiry (a psychological assessment of the perpetrator) was scientifically credible. For the first stage of our involvement, we insisted on being blind to details of the case, information about the perpetrator, and the assessment report itself for our initial analysis of what a scientifically credible report should look like. In our second phase, we applied those principles to evaluate the actual report in the case. We published part one of our analysis as an open-access journal article (Neal, Martire, et al., 2022) and made publicly available the items we developed to evaluate the quality of psychological assessment reports for others to use (https://osf.io/jhpqu).

What new or expanded research are you planning to pursue?

I’m working with Kristy Martire again and a great team of folks on expertise itself: reconsidering theories of expertise and refining how we can better identify it across domains and tasks (especially for those kinds of domains/tasks where expert performance is not visible). These issues are particularly important in law, where people’s lives and liberties are staked on whether we get these things right.

I’m also thinking a lot about how legal systems can make better use of expert evidence. Our legal system has evolved particular rules to try to foster good expert performance, but the rules are not working very well (see, e.g., Neal et al., 2019). There are many reasons for this wicked problem, and innovative solutions are needed. I’m considering how innovations in science, as the credibility revolution continues to evolve, could be useful for solving similar problems in law, and I’m excited by the prospect of testing new solutions.

What is the biggest challenge you have encountered in your career?

I think in big, synergistic, and interdisciplinary ways, but that kind of approach has few power brokers championing it, and it can be tough because it tends to work against the disciplinary status quo. This challenge arises not just across disciplines but even within psychology itself: My work weaves together theories and methods from the clinical, social, and cognitive traditions of psychological science. This kind of boundary-spanning is difficult to achieve because it doesn’t fit easily into existing categories, and its value is not always recognized by scholars who fit more clearly within these boundaries.

What practical advice would you offer to an early career researcher who wants to be in your position someday, especially those interested in getting involved in human judgment processes, expert judgment, or legal psychology?

Realize that your life is happening now. Nurture your purpose both in terms of career and also personal life: Both have to be tended continuously for goals to be achieved. Set your goals and then pursue them diligently throughout your life, refining them with care as you go. Read voraciously. Be kind, curious, and open to the variety of opportunities and experiences that can lead you to your goals. Say “yes” to opportunities early on and embrace them (like joining research lab(s) as an undergrad even if the lab’s work isn’t exactly what you want to be doing). As you progress, get more discriminating about what opportunities you actually have time to embrace and that will keep you on your path.

Excel: Everything you do matters for the reputation you develop (do good work but don’t let perfectionism knock you off track). Recognize that there are many ways to do work in these areas. You can find good work without a graduate degree, and if you choose to pursue graduate education you can look broadly at what kinds of pathways might work for you (no one path is the way you must proceed toward an outcome like this one). Find research papers you love and figure out who wrote them: Seek to work with those people in graduate school, postdocs, jobs, and collaborations. Also read Paul Silvia’s book How to Write a Lot and check out the Faculty Success Program (ncfdd.org). Finally, try hard things but also know that failure is common (google “failure CV”). One mentor told me, “You have to play ball: You can’t hit a home run if you aren’t swinging the bat.” It’s excellent advice.

Ioannidis, J. P. A. (2012). Why science is not necessarily self-correcting. Perspectives on Psychological Science, 7(6), 645–654. https://doi.org/10.1177/1745691612464056

Neal, T. M. S., & Brodsky, S. L. (2016). Forensic psychologists’ perceptions of bias and potential correction strategies in forensic mental health evaluations. Psychology, Public Policy, and Law, 22(1), 58–76. https://doi.org/10.1037/law0000077

Neal, T. M. S., Lienert, P., Denne, E., & Singh, J. P. (2022). A general model of cognitive bias in human judgment and systematic review specific to forensic mental health. Law and Human Behavior, 46(2), 99–120. https://doi.org/10.1037/lhb0000482

Neal, T. M. S., Martire, K. A., Johan, J. L., Mathers, E. M., & Otto, R. K. (2022). The law meets psychological expertise: Eight best practices to improve forensic psychological assessment. Annual Review of Law and Social Science, 18, 169–192. https://doi.org/10.1146/annurev-lawsocsci-050420-010148

Neal, T. M. S., Sellbom, M., & de Ruiter, C. (2022). Personality assessment in legal contexts: Introduction to the special issue. Journal of Personality Assessment, 104(2), 127–136. https://doi.org/10.1080/00223891.2022.2033248

Neal, T. M. S., Slobogin, C., Saks, M. J., Faigman, D. L., & Geisinger, K. F. (2019). Psychological assessments in legal contexts: Are courts keeping “junk science” out of the courtroom? Psychological Science in the Public Interest, 20(3), 135–164. https://doi.org/10.1177/1529100619888860

Pronin, E., Lin, D. Y., & Ross, L. (2002). The bias blind spot: Perceptions of bias in self versus others. Personality and Social Psychology Bulletin, 28(3), 369–381. https://doi.org/10.1177/0146167202286008

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. https://doi.org/10.1177/0956797611417632
