
How the Classics Changed Research Ethics

Some of history’s most controversial psychology studies helped drive extensive protections for human research participants. Some say those reforms went too far.

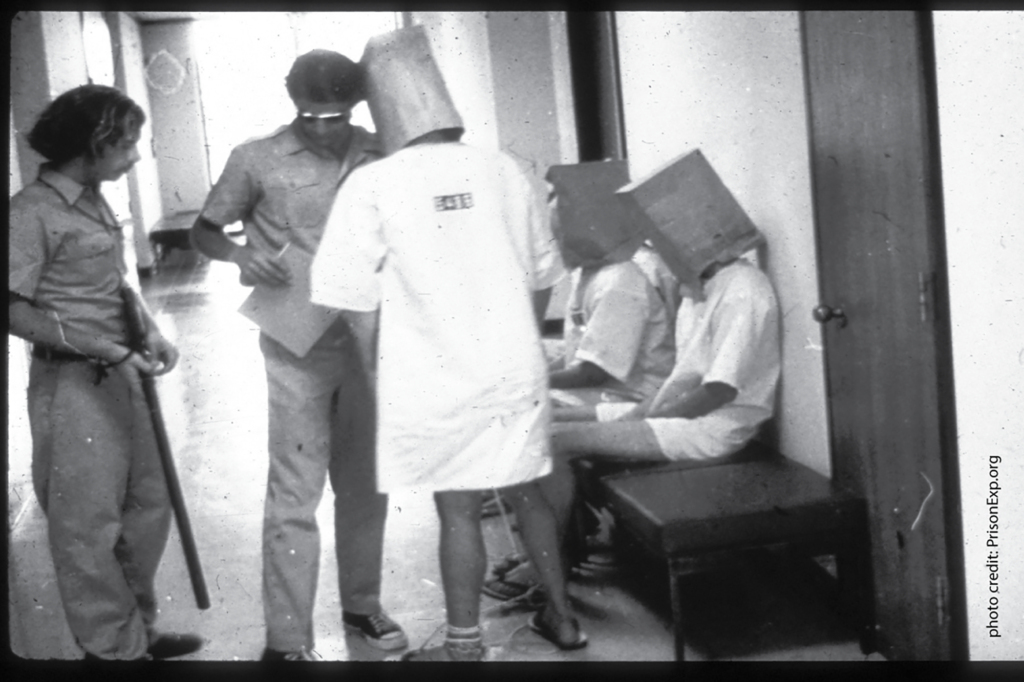

Photo above: In 1971, APS Fellow Philip Zimbardo halted his classic prison simulation at Stanford after volunteer “guards” became abusive to the “prisoners,” famously leading one prisoner into a fit of sobbing. Photo credit: PrisonExp.org

Nearly 60 years have passed since Stanley Milgram’s infamous “shock box” study sparked an international focus on ethics in psychological research. Countless historians and psychology instructors assert that Milgram’s experiments—along with studies like the Robbers Cave and Stanford prison experiments—could never occur today; ethics gatekeepers would swiftly bar such studies from proceeding, recognizing the potential harms to the participants.

But the reforms that followed some of the 20th century’s most alarming biomedical and behavioral studies have overreached, many social and behavioral scientists complain. Studies that pose no peril to participants confront the same standards as experimental drug treatments or surgeries, they contend. The institutional review boards (IRBs) charged with protecting research participants fail to understand minimal risk, they say. Researchers complain they waste time addressing IRB concerns that have nothing to do with participant safety.

Several factors contribute to this conflict, ethicists say. Researchers and IRBs operate in a climate of misunderstanding, confusing regulations, and a systemic lack of ethics training, said APS Fellow Celia Fisher, a Fordham University professor and research ethicist, in an interview with the Observer.

“In my view, IRBs are trying to do their best and investigators are trying to do their best,” Fisher said. “It’s more that we really have to enhance communication and training on both sides.”

‘Sins’ from the past

Modern human-subjects protections date back to the 1947 Nuremberg Code, the response to Nazi medical experiments on concentration-camp internees. Those ethical principles, which no nation or organization has officially accepted as law or official ethics guidelines, emphasized that a study’s benefits should outweigh the risks and that human subjects should be fully informed about the research and participate voluntarily.

See the 2014 Observer cover story by APS Fellow Carol A. Tavris, “Teaching Contentious Classics,” for more about these controversial studies and how to discuss them with students.

But the discovery of U.S.-government-sponsored research abuses, including the Tuskegee syphilis experiment on African American men and radiation experiments on humans, accelerated regulatory initiatives. The abuses investigators uncovered in the 1970s, 80s, and 90s—decades after the experiments had occurred—heightened policymakers’ concerns “about what else might still be going on,” George Mason University historian Zachary M. Schrag explained in an interview. These concerns generated restrictions not only on biomedical research but on social and behavioral studies that pose a minute risk of harm.

“The sins of researchers from the 1940s led to new regulations in the 1990s, even though it was not at all clear that those kinds of activities were still going on in any way,” said Schrag, who chronicled the rise of IRBs in his book Ethical Imperialism: Institutional Review Boards and the Social Sciences, 1965–2009.

Accompanying the medical research scandals were controversial psychological studies that provided fodder for textbooks, historical tomes, and movies.

- In the early 1950s, social psychologist Muzafer Sherif and his colleagues used a Boy Scout camp called Robbers Cave to study intergroup hostility. They randomly assigned preadolescent boys to one of two groups and concocted a series of competitive activities that quickly sparked conflict. They later set up a situation that compelled the boys to overcome their differences and work together. The study provided insights into prejudice and conflict resolution but generated criticism because the children weren’t told they were part of an experiment.

- In 1961, Milgram began his studies on obedience to authority by directing participants to administer increasing levels of electric shock to another person (a confederate). To Milgram’s surprise, more than 65% of the participants delivered the full voltage of shock (which unbeknownst to them was fake), even though many were distressed about doing so. Milgram was widely criticized for the manipulation and deception he employed to carry out his experiments.

- In 1971, APS Fellow Philip Zimbardo halted his classic prison simulation at Stanford after volunteer “guards” became abusive to the “prisoners,” famously leading one prisoner into a fit of sobbing.

Western policymakers created a variety of safeguards in the wake of these psychological studies and other medical research. Among them was the Declaration of Helsinki, an ethical guide for human-subjects research developed by the Europe-based World Medical Association. The U.S. Congress passed the National Research Act of 1974, which created a commission to oversee participant protections in biomedical and behavioral research. And in the 90s, federal agencies adopted the Federal Policy for the Protection of Human Subjects (better known as the Common Rule), a code of ethics applied to any government-funded research. IRBs review studies through the lens of the Common Rule. After that, social science research, including studies in social psychology, anthropology, sociology, and political science, began facing widespread institutional review (Schrag, 2010).

Sailing Through Review

Psychological scientists and other researchers who have served on institutional review boards provide these tips to help researchers get their studies reviewed swiftly.

- Determine whether your study qualifies for minimal-risk exemption from review. Online tools are even in development to help researchers self-determine exempt status (Ben-Shahar, 2019; Schneider & McCutcheon, 2018).

- If you’re not clear about your exemption, research the regulations to understand how they apply to your planned study. Show you’ve done your homework and have developed a protocol that is safe for your participants.

- Consult with stakeholders. Look for advocacy groups and representatives from the population you plan to study. Ask them what they regard as fair compensation for participation. Get their feedback about your questionnaires and consent forms to make sure they’re understandable. These steps help you better show your IRB that the population you’re studying will find the protections adequate (Fisher, 2022).

- Speak to IRB members or staff before submitting the protocol. Ask them their specific concerns about your study, and get guidance on writing up the protocol to address those concerns. Also ask them about expected turnaround times so you can plan your submission in time to meet any deadlines associated with your study (e.g., grant application deadlines).

References

Ben-Shahar, O. (2019, December 2). Reforming the IRB in experimental fashion. The Regulatory Review. University of Pennsylvania. https://www.theregreview.org/2019/12/02/ben-shahar-reforming-irb-experimental-fashion/

Fisher, C. B. (2022). Decoding the ethics code: A practical guide for psychologists (5th ed.). Sage Publications.

Schneider, S. L., & McCutcheon, J. A. (2018). Proof of concept: Use of a wizard for self-determination of IRB exempt status. Federal Demonstration Partnership. http://thefdp.org/default/assets/File/Documents/wizard_pilot_final_rpt.pdf

Social scientists have long contended that the Common Rule was largely designed to protect participants in biomedical experiments—where scientists face the risk of inducing physical harm on subjects—but fits poorly with the other disciplines that fall within its reach.

“It’s not like the IRBs are trying to hinder research. It’s just that regulations continue to be written in the medical model without any specificity for social science research,” Fisher explained.

The Common Rule was updated in 2018 to ease the level of institutional review for low-risk research techniques (e.g., surveys, educational tests, interviews) that are frequent tools in social and behavioral studies. A special committee of the National Research Council (NRC), chaired by APS Past President Susan Fiske, recommended many of those modifications. Fisher was involved in the NRC committee, along with APS Fellows Richard Nisbett (University of Michigan) and Felice J. Levine (American Educational Research Association), and clinical psychologist Melissa Abraham of Harvard University. But the Common Rule reforms have yet to fully expedite much of the research, partly because the review boards remain confused about exempt categories, Fisher said.

Interference or support?

That regulatory confusion has generated sour sentiments toward IRBs. For decades, many social and behavioral scientists have complained that IRBs effectively impede scientific progress through arbitrary questions and objections.

In a Perspectives on Psychological Science paper they co-authored, APS Fellows Stephen Ceci of Cornell University and Maggie Bruck of Johns Hopkins University discussed an IRB rejection of their plans for a study with 6- to 10-year-old participants. Ceci and Bruck planned to show the children videos depicting a fictional police officer engaging in suggestive questioning of a child.

“The IRB refused to approve the proposal because it was deemed unethical to show children public servants in a negative light,” they wrote, adding that the IRB held firm on its rejection despite government funders already having approved the study protocol (Ceci & Bruck, 2009).

Other scientists have complained the IRBs exceed their Common Rule authority by requiring review of studies that are not government funded. In 2011, psychological scientist Jin Li sued Brown University in federal court for barring her from using data she collected in a privately funded study on educational testing. Brown’s IRB objected to the fact that she paid her participants different amounts of compensation based on need. (A year later, the university settled the case with Li.)

In addition, IRBs often hover over minor aspects of a study that have no genuine relation to participant welfare, Ceci said in an email interview.

“You can have IRB approval and later decide to make a nominal change to the protocol (a frequent one is to add a new assistant to the project or to increase the sample size),” he wrote. “It can take over a month to get approval. In the meantime, nothing can move forward and the students sit around waiting.”

Not all researchers view institutional review as a roadblock. Psychological scientist Nathaniel Herr, who runs American University’s Interpersonal Emotion Lab and has served on the school’s IRB, says the board effectively collaborated with researchers to ensure the study designs were safe and that participant privacy was appropriately protected.

“If the IRB that I operated on saw an issue, they shared suggestions we could make to overcome that issue,” Herr said. “It was about making the research go forward. I never saw a project get shut down. It might have required a significant change, but it was often about confidentiality and it’s something that helps everybody feel better about the fact we weren’t abusing our privilege as researchers. I really believe it [the review process] makes the projects better.”

Some universities—including Fordham University, Yale University, and The University of Chicago—even have social and behavioral research IRBs whose members include experts optimally equipped to judge the safety of a psychological study, Fisher noted.

Training gaps

Institutional review is beset by a lack of ethics training in research programs, Fisher believes. While students in professional psychology programs take accreditation-required ethics courses in their doctoral programs, psychologists in other fields have no such requirement. In these programs, ethics training is often limited to an online program that provides, at best, a perfunctory overview of federal regulations.

“It gives you the fundamental information, but it has nothing to do with our real-world deliberations about protecting participants,” she said.

Additionally, harm to a participant is difficult to predict. As sociologist Martin Tolich of University of Otago in New Zealand wrote, the Stanford prison study had been IRB-approved.

“Prediction of harm with any certainty is not necessarily possible, and should not be the aim of ethics review,” he argued. “A more measured goal is the minimization of risk, not its eradication” (Tolich, 2014).

Fisher notes that scientists aren’t trained to recognize and respond to adverse events when they occur during a study.

“To be trained in research ethics requires not just knowing you have to obtain informed consent,” she said. “It’s being able to apply ethical reasoning to each unique situation. If you don’t have the training to do that, then of course you’re just following the IRB rules, which are very impersonal and really out of sync with the true nature of what we’re doing.”

Researchers also raise concerns that, in many cases, the regulatory process harms vulnerable populations rather than safeguards them. Fisher and psychological scientist Brian Mustanski of University of Illinois at Chicago wrote in 2016, for example, that the review panels may be hindering HIV prevention strategies by requiring researchers to get parental consent before including gay and bisexual adolescents in their studies. Under that requirement, youth who are not out to their families get excluded. Boards apply those restrictions even in states permitting minors to get HIV testing and preventive medication without parental permission, and even though federal rules allow IRBs to waive parental consent in research settings (Mustanski & Fisher, 2016).

IRBs also place counterproductive safety limits on suicide and self-harm research, watching for any sign that a participant might need to be removed from a clinical study and hospitalized.

“The problem is we know that hospitalization is not the panacea,” Fisher said. “It stops suicidality for the moment, but actually the highest-risk period is 3 months after the first hospitalization for a suicide attempt. Some of the IRBs fail to consider that a non-hospitalization intervention that’s being tested is just as safe as hospitalization. It’s a difficult problem, and I don’t blame them. But if we have to take people out of a study as soon as they reach a certain level of suicidality, then we’ll never find effective treatment.”

Communication gaps

Supporters of the institutional review process say researchers tend to approach the IRB process too defensively, overlooking the board’s good intentions.

“Obtaining clarification or requesting further materials serve to verify that protections are in place,” a team of institutional reviewers wrote in an editorial for Psi Chi Journal of Psychological Research. “If researchers assume that IRBs are collaborators in the research process, then these requests can be seen as prompts rather than as admonitions” (Domenech Rodriguez et al., 2017).

Fisher agrees that researchers’ attitudes play a considerable role in the conflicts that arise over ethics review. She recommends researchers develop each protocol with review-board questions in mind (see sidebar).

“For many researchers, there’s a disdain for IRBs,” she said. “IRBs are trying their hardest. They don’t want to reject research. It’s just that they’re not informed. And sometimes if behavioral scientists or social scientists are disdainful of their IRBs, they’re not communicating with them.”

Some researchers are building evidence to help IRBs understand the level of risk associated with certain types of psychological studies.

- In a study involving more than 500 undergraduate students, for example, psychological scientists at the University of New Mexico found that the participants were less upset than expected by questionnaires about sex, trauma, and other sensitive topics. This finding, the researchers reported in Psychological Science, challenges the usual IRB assumption about the stress that surveys on sex and trauma might inflict on participants (Yeater et al., 2012).

- A study involving undergraduate women indicated that participants who had experienced child abuse, although more likely than their peers to report distress from recalling the past as part of a study, were also more likely to say that their involvement in the research helped them gain insight into themselves and hoped it would help others (Decker et al., 2011).

- A multidisciplinary team, including APS Fellow R. Michael Furr of Wake Forest University, found that adolescent psychiatric patients showed a drop in suicide ideation after being questioned regularly about their suicidal thoughts over the course of 2 years. This countered concerns that asking about suicidal ideation would trigger an increase in such thinking (Mathias et al., 2012).

- A meta-analysis of more than 70 participant samples—totaling nearly 74,000 individuals—indicated that people may experience only moderate distress when discussing past traumas in research studies. They also generally might find their participation to be a positive experience, according to the findings (Jaffe et al., 2015).

The takeaways

So, are the historians correct? Would any of these classic experiments survive IRB scrutiny today?

Reexaminations of those studies make the question arguably moot. Recent revelations about some of these studies suggest that scientific integrity concerns may taint the legacy of those findings as much as their impact on participants did (Le Texier, 2019; Perry, 2018; Resnick, 2018).

Also, not every aspect of the controversial classics is taboo in today’s regulatory environment. Scientists have won IRB approval to conceptually replicate both the Milgram and Stanford prison experiments (Burger, 2009; Reicher & Haslam, 2006). They simply modified the protocols to avert any potential harm to the participants. (Scholars, including Zimbardo himself, have questioned the robustness of those replication findings [Elms, 2009; Miller, 2009; Zimbardo, 2006].)

Many scholars believe there are clear and valuable lessons from the classic experiments. Milgram’s work, for instance, can inject clarity into pressing societal issues such as political polarization and police brutality. Ethics training and monitoring simply need to include those lessons learned, they say.

“We should absolutely be talking about what Milgram did right, what he did wrong,” Schrag said. “We can talk about what we can learn from that experience and how we might answer important questions while respecting the rights of volunteers who participate in psychological experiments.”


References

Burger, J. M. (2009). Replicating Milgram: Would people still obey today? American Psychologist, 64(1), 1–11. https://doi.org/10.1037/a0010932

Ceci, S. J., & Bruck, M. (2009). Do IRBs pass the minimal harm test? Perspectives on Psychological Science, 4(1), 28–29. https://doi.org/10.1111/j.1745-6924.2009.01084.x

Decker, S. E., Naugle, A. E., Carter-Visscher, R., Bell, K., & Seifer, A. (2011). Ethical issues in research on sensitive topics: Participants’ experiences of stress and benefit. Journal of Empirical Research on Human Research Ethics: An International Journal, 6(3), 55–64. https://doi.org/10.1525/jer.2011.6.3.55

Domenech Rodriguez, M. M., Corralejo, S. M., Vouvalis, N., & Mirly, A. K. (2017). Institutional review board: Ally not adversary. Psi Chi Journal of Psychological Research, 22(2), 76–84. https://doi.org/10.24839/2325-7342.JN22.2.76

Elms, A. C. (2009). Obedience lite. American Psychologist, 64(1), 32–36. https://doi.org/10.1037/a0014473

Fisher, C. B., True, G., Alexander, L., & Fried, A. L. (2009). Measures of mentoring, department climate, and graduate student preparedness in the responsible conduct of psychological research. Ethics & Behavior, 19(3), 227–252. https://doi.org/10.1080/10508420902886726

Jaffe, A. E., DiLillo, D., Hoffman, L., Haikalis, M., & Dykstra, R. E. (2015). Does it hurt to ask? A meta-analysis of participant reactions to trauma research. Clinical Psychology Review, 40, 40–56. https://doi.org/10.1016/j.cpr.2015.05.004

Le Texier, T. (2019). Debunking the Stanford Prison experiment. American Psychologist, 74(7), 823–839. https://doi.org/10.1037/amp0000401

Mathias, C. W., Furr, R. M., Sheftall, A. H., Hill-Kapturczak, N., Crum, P., & Dougherty, D. M. (2012). What’s the harm in asking about suicide ideation? Suicide and Life-Threatening Behavior, 42(3), 341–351. https://doi.org/10.1111/j.1943-278X.2012.0095.x

Miller, A. G. (2009). Reflections on “Replicating Milgram” (Burger, 2009). American Psychologist, 64(1), 20–27. https://doi.org/10.1037/a0014407

Mustanski, B., & Fisher, C. B. (2016). HIV rates are increasing in gay/bisexual teens: IRB barriers to research must be resolved to bend the curve. American Journal of Preventive Medicine, 51(2), 249–252. https://doi.org/10.1016/j.amepre.2016.02.026

Perry, G. (2018). The lost boys: Inside Muzafer Sherif’s Robbers Cave experiment. Scribe Publications.

Reicher, S., & Haslam, S. A. (2006). Rethinking the psychology of tyranny: The BBC prison study. British Journal of Social Psychology, 45, 1–40. https://doi.org/10.1348/014466605X48998

Resnick, B. (2018, June 13). The Stanford prison experiment was massively influential. We just learned it was a fraud. Vox. https://www.vox.com/2018/6/13/17449118/stanford-prison-experiment-fraud-psychology-replication

Schrag, Z. M. (2010). Ethical imperialism: Institutional review boards and the social sciences, 1965–2009. Johns Hopkins University Press.

Tolich, M. (2014). What can Milgram and Zimbardo teach ethics committees and qualitative researchers about minimal harm? Research Ethics, 10(2), 86–96. https://doi.org/10.1177/1747016114523771

Yeater, E., Miller, G., Rinehart, J., & Nason, E. (2012). Trauma and sex surveys meet minimal risk standards: Implications for institutional review boards. Psychological Science, 23(7), 780–787. https://doi.org/10.1177/0956797611435131

Zimbardo, P. G. (2006). On rethinking the psychology of tyranny: The BBC prison study. British Journal of Social Psychology, 45, 47–53. https://doi.org/10.1348/014466605X81720
