Academic Observer

What’s New at Psychological Science

Henry L. Roediger, III

AO: You’re about to wind up your second year as Editor in Chief of Psychological Science (PS). How has the experience of the last two years meshed with what you expected before you took the job?

EE: I had the good fortune to apprentice with Editor Emeritus Rob Kail beginning in the fall of 2011. Under his mentorship, I served as an Associate Editor in September and October, before switching to Senior Editor in November and December. The experience was enormously helpful in easing me into the Editor in Chief role, which started in January 2012. The experience also gave me an early indication of what was to come. Before I began training with Rob, I knew that PS received roughly 3,000 submissions annually, but that number was merely an abstraction. Now it’s very much a daily reality.

AO: Are there aspects of the job that have surprised you?

EE: PS is a far-flung enterprise: I live in Vancouver; Amy Drew, the Peer-Review Manager, is at APS headquarters in Washington, DC; Michele Nathan, the journal’s Managing Editor, works in Virginia; Kristen Medeiros checks in new submissions from her home in Hawaii; the production team at SAGE Publications is located near Los Angeles; and the journal’s 23 Senior and Associate Editors are based in nine countries on three continents. I’ve been pleasantly surprised by how seamlessly the work of the journal flows, thanks to email, Skype, and other modern communication marvels that most people, including me, take for granted.

Eric Eich

AO: You seem to be enjoying yourself. What parts are the most fun?

EE: The first day on the job — January 1, 2012 — was memorable. At midnight my fiancée, Joanne Elliott, and I opened a bottle of champagne, fired up the MacBook, and waited for the first submission to arrive. We didn’t wait long: 15 minutes later, a paper came in from Beijing, followed by submissions from Amsterdam, Toronto, and other cities across North America before winding down in San Francisco. It was like watching New Year’s Day unfold across the globe from the perspective of some seriously dedicated folks — after all, this is supposed to be a holiday!

My more routine enjoyment comes from learning new things about psychology, especially in areas I wasn’t familiar with before, and sharing this knowledge with friends, colleagues, and students. Last year I developed a new course designed to teach advanced UBC psych majors to think and write like reviewers for PS. Working in small groups or individually, students read and critically reviewed a wide variety of in-press papers (manuscripts that have recently entered the production pipeline) and compared and contrasted their assessments with the actual reviews and decision letters written by referees and editors. It was a fun course to teach, and it gave the students a unique opportunity to hone their critical-reasoning abilities, strengthen their speaking and writing skills, and learn about leading-edge research in psychology. I look forward to teaching it again next year.

AO: The course sounds interesting, but what will you do if a student finds a fatal flaw in an in-press paper that the reviewers and editors (including yourself) missed?

EE: The student will get an automatic A for the course; that’s the easy part. As for the authors, we’ll cross that bridge together when we come to it.

AO: What are some of the changes you’ve instituted at Psychological Science since you began your term, and how have they been received?

EE: Back in January 2012, I added a new twist to the manuscript-submission process. When submitting manuscripts presenting original empirical work, authors are asked to briefly answer three questions: (1) What will the reader of this article learn about psychology that she or he did not know (or could not have known) before? (2) Why is that knowledge important for the field? (3) How are the claims made in the article justified by the methods used?

The insertion of “about psychology” and “for the field” in Questions 1 and 2, respectively, is meant to drive home the obvious but important point that PS is not a neuroscience journal or a social cognition journal or an emotion research journal or any other kind of specialty journal. It’s about psychology, which is why that word is embedded in the first two questions. It’s also about applying scientific methods to study behavior and experience, which motivates asking authors to explicitly tie their claims to their methods in Question 3.

I hoped that answers to the three questions would help authors put their best case forward and assist editors and reviewers in their deliberations. The feedback I’ve received has been favorable, so much so that, starting in 2014, editors and reviewers will be asked to evaluate submissions to the journal with the three questions in mind. Manuscripts that provide clear and compelling answers to the “What,” “Why,” and “How” questions will have the best prospects of being accepted for publication.

Since January 2012, we’ve made several other changes to the journal, for instance, separating Supplemental Material into reviewed versus unreviewed types and requiring authors to describe their contribution to the research or writing of a manuscript. But all were — dare I say the word — incremental tweaks to the system. Most of my time has been spent reviewing manuscripts (about 1,700 to date), which has given me a good sense of the journal’s strengths and shortcomings and helped me think through ways of improving our standards and practices.

AO: So, what changes are in store for the journal in 2014?

EE: Five major initiatives will be introduced, including the new manuscript-evaluation criteria we just discussed. Here are detailed descriptions of the remaining four:

Enhanced Reporting of Methods. Beginning January 2014, the manuscript submission portal will have a new section containing checkboxes for four Disclosure Statement items (very similar to existing items confirming that research meets ethical guidelines, etc.). Submitting authors must check each item in order for their manuscript to proceed to editorial evaluation; by doing so, authors actively declare that they have disclosed all of the required information for each study in the submitted manuscript. The Disclosure Statements cover four categories of important methodological details — Exclusions, Manipulations, Measures, and Sample Size — that have not required disclosure under current reporting standards (of PS in particular or psychology journals in general) but are essential for interpreting research findings.

This initiative is inspired in part by PsychDisclosure.org, a recent initiative by Etienne LeBel and associates. Incorporating ideas raised in recent papers by Simmons, Nelson, and Simonsohn (2011, 2012) on false-positive psychology, LeBel et al. developed a four-item survey (the Disclosure Statement) and sent it to a random sample of authors of articles in several journals, including Psychological Science. For all studies in the article, authors were asked whether they reported (1) the total number of observations that were excluded, if any, and the criterion for doing so; (2) all tested experimental conditions, including failed manipulations; (3) all administered measures/items; and (4) how they determined their sample sizes and decided when to quit collecting data.

By LeBel et al.’s account, the primary benefits of PsychDisclosure.org include

increasing the information value of recently published articles to allow for more accurate interpretation of the reported findings…making visible what goes on under the radar of official publications, and…promoting sounder research practices by raising awareness regarding the ineffective and out-of-date reporting standards in our field with the hope that our website inspires journal editors to change editorial policies whereby the four categories of methodological details disclosed on this website become a required component of submitted manuscripts.

My colleagues and I agree that it would be a good thing to create a simple public norm for reporting what should be requisite information. Our sense is that Simmons, LeBel, and their colleagues are on the right track and that PS is well-positioned to promote the cause. Requiring authors to complete the four Disclosure Statement items during the submission process, starting in January, is one way to do that.

The checklist looks something like this:

For each study reported in your manuscript, check the boxes below to:

(1) Confirm that (a) the total number of excluded observations and (b) the reasons for doing so have been reported in the Method section(s) [ ]. If no observations were excluded, check here [ ].

(2) Confirm that all independent variables or manipulations, whether successful or failed, have been reported in the Method section(s) [ ]. If there were no independent variables or manipulations, as in the case of correlational research, check here [ ].

(3) Confirm that all dependent variables or measures that were analyzed for this article’s target research question have been reported in the Method section(s) [ ].

(4) Confirm that (a) how sample size was determined and (b) your data-collection stopping rule have been reported in the Method section(s) [ ] and provide the page number(s) on which this information appears in your manuscript: ______________________________________________________________________

Several points merit attention. First, as shown above, the four-item Disclosure Statement applies only “to each study reported in your manuscript.” Originally, we considered adding a fifth item covering additional studies, including pilot work, that were not mentioned in the main text but that tested the same research question. However, feedback from several sources suggested that this would open a large can of worms. To paraphrase one commentator (Leif Nelson), it is all too easy for a researcher to think that an excluded study does not count. Furthermore, this actually puts a meaningful burden on the “full disclosure” researcher. The four items in the Disclosure Statement shown above are equally easy for everyone to answer; either that information is already in the manuscript or they can go back and add it. But a potential fifth item, covering additional studies, is different. The researcher who convinces himself or herself that one or more excluded studies don’t count has now saved the hours it might take to write them up for this query. File-drawering studies is damaging, but we are not convinced that this will solve that problem. A better solution involves preregistration of study methods and analyses — an approach we also take up.

Second, the focus of Item 3 is on dependent variables (DVs) or measures that were analyzed to address the target research question posed in the current submission. It is not uncommon for experimentalists to include one or more “exploratory” measures in a given study and to distinguish these from the “focal” DVs that represent the crux of the investigation. It’s OK for them not to disclose exploratory DVs assessed for separate research questions, but it’s not OK to withhold exploratory DVs assessed for the current article’s target research question. By the same token, correlational researchers in such diverse areas as behavioral genetics, personality theory, cultural psychology, and cognitive development may measure hundreds or even thousands of variables, most of which are set aside for other papers on separate issues involving different analyses. Item 3 is written to convey our trust in experimentalists and nonexperimentalists alike to report all analyzed measures that relate to the target research question at stake in this particular paper.

Third, in connection with Item 4, editors and reviewers will verify that the sample size and stopping rule information is reported for each study, given that this is the only information that authors always need to report. For the three other items, there’s nothing for editors or reviewers to check: they will take authors at their word that they have disclosed all excluded observations (if there were any), all independent variables/manipulations (again, if there were any), and all analyzed DVs/measures.
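The submission-portal logic implied by Items 1 through 4 can be sketched roughly as follows. This is an illustration only, not the journal's actual portal code: the `DisclosureForm` class, its field names, and the `missing_items` helper are all hypothetical, and the rule that Item 4 needs page numbers is read from the checklist wording above.

```python
from dataclasses import dataclass


@dataclass
class DisclosureForm:
    """Hypothetical model of the four checklist items for one manuscript."""
    exclusions_reported: bool = False      # Item 1 box
    no_exclusions: bool = False            # Item 1 alternative box
    manipulations_reported: bool = False   # Item 2 box
    no_manipulations: bool = False         # Item 2 alternative box
    measures_reported: bool = False        # Item 3 box
    sample_size_reported: bool = False     # Item 4 box
    sample_size_pages: str = ""            # Item 4 page number(s)


def missing_items(form: DisclosureForm) -> list[int]:
    """Return the item numbers that would still block submission."""
    missing = []
    # Items 1 and 2 can be satisfied by either the main box or the alternative.
    if not (form.exclusions_reported or form.no_exclusions):
        missing.append(1)
    if not (form.manipulations_reported or form.no_manipulations):
        missing.append(2)
    if not form.measures_reported:
        missing.append(3)
    # Item 4 always applies and asks for page numbers as well.
    if not (form.sample_size_reported and form.sample_size_pages.strip()):
        missing.append(4)
    return missing


# Example: a correlational study with no exclusions and no manipulations.
correlational_study = DisclosureForm(
    no_exclusions=True,
    no_manipulations=True,
    measures_reported=True,
    sample_size_reported=True,
    sample_size_pages="7-8",
)
print(missing_items(correlational_study))  # []
print(missing_items(DisclosureForm()))     # [1, 2, 3, 4]
```

Note that only Item 4 carries something a reviewer can look up (the page numbers); the other fields simply record the author's declaration.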

Lastly, I see the mandatory disclosure of Exclusions, Manipulations, Measures, and Sample Size as a means of giving readers a more accurate picture of the actual methods used to obtain the published findings. At the same time, I expect some readers will complain that the Disclosure Statement goes too far, while others will say it does not go far enough. Similarly, for every reader who believes the Disclosure Statement is too judgmental and inquisitive, another will think it puts too much trust in the better angels of the authors’ nature. I welcome suggestions from the readership for improving the disclosure process or any of the other initiatives sketched here.

Revised Word Limits. Research Articles and Research Reports are the journal’s principal platforms for the publication of original empirical research; together they account for the large majority of submissions to PS. Previously, Research Articles and Research Reports were limited to 4,000 and 2,500 words, respectively, which included all major sections of the main text (Introduction, Methods, Results, and Discussion) along with notes, acknowledgements, and appendices.

Going forward, the new limits on Research Articles and Research Reports will be 2,000 and 1,000 words, respectively. As before, notes, acknowledgements, and appendices count toward these limits, as do Introduction and Discussion material (including both interim Discussion sections and closing General Discussions). However, the Methods and Results sections of a manuscript are excluded from the word limits on these article types. The intent here is to afford authors the opportunity to report what they did, and what they found, in a manner that is clear, concise — and complete.

Earlier this year I ran a pilot project in which authors of recent submissions to the journal were asked to complete the four-item Disclosure Statement and to estimate how long it took them to do so.

The mean and median completion times were 8 and 5 minutes, respectively, suggesting that the task is reasonably straightforward and practicable. That’s the good news. The bad news is that more than a few authors said that they would have reported one or more of the four major categories of information – Data Exclusions, Manipulations/IVs, Measures/DVs, and Sample Size – had it not been for the journal’s word limits.

In some cases, the authors could have moved some part of the main text to the Supplemental Material, thus making room for information bearing on the disclosure items, or they could have maxed out on the word limit (several of these submissions were 100+ words under the cap). More generally, and more importantly, it’s the authors’ responsibility to navigate the space limits that PS (or any journal) sets on submissions.

That said, I think there is merit in removing boundaries on Method and Results sections to enable and encourage authors to describe what they did, and what they found, in a manner that’s not only clear and concise, but complete as well. As recently as 2011, it was fine for us to say: “Psychological Science does not normally provide for the primary publication of extensive empirical studies with the full presentation of methods and data that is standard for the more specialized research journals.” That’s not OK today, given well-justified concerns — about replicability, p-hacking, HARKing, etc. — that have recently emerged in psychology and other sciences (including cancer research, pharmaceutics, decision sciences, and behavior genetics).

In the 2014 Submission Guidelines, I set the word limits on Research Articles and Research Reports at 2,000 and 1,000 words, respectively. As mentioned earlier, these limits include notes, acknowledgements, and appendices, as well as introductory and Discussion material. In principle, authors have no limits on their Method or Results sections. In practice, however, reviewers and editors will insist that authors be as succinct as possible. Concision can be achieved without sacrificing clarity or completeness. Authors should transfer nonessential, inside-baseball aspects of their Method and Results sections from the main text to the Supplemental Material.

Incidentally, the reason for focusing on Research Articles and Research Reports is that they account for 83% of all new submissions to PS. We’ll keep the 1,000-word, all-inclusive cap on Short Reports for the relatively rare cases in which a gifted writer can tell his or her story most effectively in this format.

Promoting Open Practices. PS is the launch vehicle for a program that seeks to incentivize open communication within the research community. Accepted PS articles may qualify for as many as three different badges to acknowledge that the authors have made their data, materials, or preregistered design and analysis plans publicly available. Badges are affixed to the articles along with links to the open data, materials, or preregistered design and analysis plans.

Despite the importance of open communication for scientific progress, present norms do not provide strong incentives for individual researchers to share data, materials, or their research process. In principle, journals could provide incentives for scientists to adopt open practices by acknowledging them in publication. In practice, the challenge is to establish which open practices should be acknowledged, what criteria must be met to earn acknowledgment, and how acknowledgement would be displayed within the journal.

Over the past several months, a group of 11 researchers led by Brian Nosek has been grappling with these and other issues. The result is an Open Practices document that proposes three forms of acknowledgment:

- Open Data badge, which is earned for making publicly available the digitally shareable data necessary to reproduce the reported results.

- Open Materials badge, which is earned for making publicly available the digitally shareable materials/methods necessary to reproduce the reported results.

- Preregistered badge, which is earned for having a preregistered design and analysis plan for the reported research and reporting results according to that plan. An analysis plan includes specification of the variables and the analyses that will be conducted. Preregistration is an effective countermeasure to the file-drawer problem alluded to earlier in connection with Disclosure Statements.

The criteria for each badge — and the processes by which they are awarded — are described in the Open Practices document along with answers to frequently asked questions. The document proposes two ways for certifying organizations to award badges for individual studies: disclosure or peer review. For now, PS will follow the simpler disclosure method.

Manuscripts accepted for publication on or after January 1, 2014, are eligible to earn any or all of the three aforementioned badges. Journal staff will contact the corresponding authors with details on the badge-awarding process.

Psychological Science is the first journal to implement the badge program, so changes are sure to come as editors and authors gain experience with it in the field. Again, I welcome comments and suggestions for improvement from our community.

Embracing the New Statistics. Null hypothesis significance testing (NHST) has long been the mainstay method of analyzing data and drawing inferences in psychology and many other disciplines. This is despite the fact that, for nearly as long, researchers have recognized essential problems with NHST in general and with the dichotomous (significant vs. nonsignificant) thinking it engenders in particular.

The problems that pervade NHST are avoided by the “new statistics” — effect sizes, confidence intervals, and meta-analyses (see www.latrobe.edu.au/psy/research/projects/esci). In fact, the sixth edition of the American Psychological Association Publication Manual (APA, 2010) recommends that psychologists should, wherever possible, use estimation and base their interpretation of research results on point and interval estimates.

PS seconds this recommendation and seeks to aid researchers in shifting from reliance on NHST to estimation and other preferred techniques. To this end, we are publishing a tutorial by Geoff Cumming, a leader in the new statistics movement, that includes examples and references to books, articles, software, and online calculators that will aid authors in understanding and implementing estimation techniques in a wide range of research settings.

A new section on statistics has been added to the 2014 Submission Guidelines to align with Geoff Cumming’s tutorial on the advantages of analyzing data using estimation methods rather than relying on null hypothesis significance tests. The goal here is to strongly encourage authors in the estimation direction, not to require it. Most researchers imprinted on NHST, and it will take some time — and sustained nudging — for them (and us) to become comfortable and confident with new ways of interpreting and reporting their results.
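To make the contrast with a bare significance test concrete, here is a minimal sketch of estimation-style reporting for two independent groups: a point estimate of the mean difference, an interval around it, and a standardized effect size. It is not taken from the tutorial or the Submission Guidelines. The function name and the scores are invented for illustration, and the interval uses a normal approximation; a t critical value would be more appropriate for samples this small.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_difference_estimate(group_a, group_b, confidence=0.95):
    """Return (difference, (ci_low, ci_high), cohens_d) for two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    diff = mean(group_a) - mean(group_b)
    var_a, var_b = stdev(group_a) ** 2, stdev(group_b) ** 2
    # Standard error of the difference, without assuming equal variances.
    se = sqrt(var_a / n_a + var_b / n_b)
    # Normal-approximation critical value (about 1.96 for 95%).
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    # Cohen's d standardizes the difference by the pooled standard deviation.
    pooled_sd = sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return diff, (diff - z * se, diff + z * se), diff / pooled_sd


# Invented scores, for illustration only.
treatment = [5.1, 6.3, 5.8, 6.9, 6.0, 5.5, 6.4, 6.1]
control = [4.8, 5.2, 5.0, 5.9, 4.6, 5.3, 5.1, 5.4]

diff, (ci_low, ci_high), d = mean_difference_estimate(treatment, control)
print(f"difference = {diff:.2f}, 95% CI [{ci_low:.2f}, {ci_high:.2f}], d = {d:.2f}")
```

Reporting the interval and the effect size shows how large the effect might plausibly be, which a significant-versus-nonsignificant verdict does not.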

AO: Do you worry about submitters abusing the new word limits by putting too much into their Method and Results sections?

EE: Several of my colleagues have strong reservations about my plan to remove word limits on Method and Results sections while capping the introductory and Discussion sections. The two chief concerns are that (a) authors may blend Discussion into Results and perhaps even pack the Method section with material that rightfully belongs in the introduction and (b) longer papers mean more work for editors and reviewers.

Such concerns are perfectly reasonable, and I share them. Following up on a lead from Ralph Adolphs, I contacted John Maunsell, Editor in Chief of the Journal of Neuroscience. As Ralph pointed out, that journal limits the size of Introduction and Discussion sections (at 500 and 1,500 words, respectively), but does not cap its Materials & Methods or Results sections.

Dr. Maunsell replied by saying:

I have not seen much evidence of authors packing the Methods or Results with Discussion material. I think that most authors realize that this is not an effective strategy for communicating discussion points. Authors might include a few sentences to place results in context when they are presented in the Results, but it has been rare to see excessive discussion. Arguably the situation might be different for [Psychological Science]. I’ve seen that some psychology journals allow articles with the format ‘…Methods-Results-Discussion-Methods-Results-Discussion-…-General Discussion’. For this reason, your authors might be more tempted to include discussion in the results. In any case, I fully approve of your move to allow authors to provide a complete, self-contained description of their experiments, which cannot be done with limits on Methods and Results.

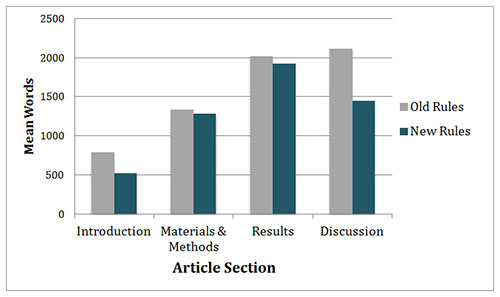

To dig into this issue a bit deeper, I estimated the word count on 48 randomly selected Journal of Neuroscience articles, half published in 1993 under the “old rules” (no limits on any section of the manuscript) and the other half published in 1996 under the “new rules” (with the aforementioned caps on Introduction and Discussion sections). The switch from old to new rules was announced in the February 1994 issue, and manuscripts submitted under the old rules were published throughout 1994 and into 1995; that’s why I picked 1996 to represent the new rules.

Figure 1

Figure 1 shows that, as expected, shifting from old to new rules produced a marked decrease in the length of Introductions (means of 791 versus 518 words) and Discussions (means of 2,114 versus 1,443 words). However, there was no appreciable change in the length of either Materials & Methods or Results. If Journal of Neuroscience authors can resist the temptation to mix Discussion with Results or Introduction with Methods, then Psychological Science authors probably can, too — given proper instruction and encouragement.

To this end, the 2014 Submission Guidelines state that authors should be able to provide a full account of their methods and results within 2,500 words for Research Articles and 2,000 words for Research Reports. If authors meet these expectations, then with the new caps on Introduction and Discussion sections, Research Articles and Research Reports will each expand by about 500 words over their current limits. I would argue that the costs of these increases (to reviewers, editors, and journal-production staff) are offset by benefits to psychological science (the field and journal alike) in enhanced transparency and self-contained accounts. So, my recommendation is that we treat the new model as an experiment and revisit the matter in June or July 2014, when we can draw on our real-world experiences instead of what-if conjectures.
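The arithmetic behind the "about 500 words" figure can be checked in a few lines. All numbers come from the limits quoted in this interview; the variable and function names are only illustrative, and the Method and Results figures are the guideline's expectation rather than a hard cap.

```python
# Previous all-inclusive limits (words).
OLD_LIMIT = {"Research Article": 4000, "Research Report": 2500}
# New caps on introductory and Discussion material, notes, and appendices.
NEW_INTRO_DISCUSSION_CAP = {"Research Article": 2000, "Research Report": 1000}
# Expected (not enforced) length of Method and Results combined.
EXPECTED_METHOD_RESULTS = {"Research Article": 2500, "Research Report": 2000}


def expected_growth(article_type: str) -> int:
    """Words gained over the old limit if authors meet the guideline."""
    new_total = (NEW_INTRO_DISCUSSION_CAP[article_type]
                 + EXPECTED_METHOD_RESULTS[article_type])
    return new_total - OLD_LIMIT[article_type]


growth = {kind: expected_growth(kind) for kind in OLD_LIMIT}
for kind, extra in growth.items():
    print(f"{kind}: +{extra} words")
# Research Article: +500 words
# Research Report: +500 words
```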

AO: Instead of uncapping the Methods and Results sections, why don’t you encourage authors to include more Supplemental Material?

EE: Several people suggested we increase the 1,000-word limit on supplemental online material, presumably of the Reviewed type (SOM-R), as an alternative to my plan to uncap the Method and Results sections. At first blush this seems like a good idea, but I think we should be wary. In a 2010 editorial, John Maunsell described the Journal of Neuroscience’s experience with ever-increasing SOMs, which reached the point where supplementary materials were nearly as large as the target articles themselves. He also laid out the reasons why the Society for Neuroscience decided to eliminate all SOM, including inadequate vetting, imprecise distinctions between core and supplemental material, and heavy workload for editors and reviewers (shifting to-be-reviewed material from the main text into a supplemental file doesn’t lighten anyone’s load).

I’m not advocating at this time that we drop SOM-R altogether as the Journal of Neuroscience did in November 2010. But I don’t think we should allow it to expand in order to accommodate research methods and findings that properly belong in the main article. Supplemental material is supposed to be just that — supplemental, not essential. Still, Dr. Maunsell’s experience suggests that the editors, reviewers, and authors of Journal of Neuroscience articles often had a tough time separating essential from nonessential material. Is our experience with PS any rosier? Probably not.

As an interesting aside, Dr. Maunsell told me:

When we stopped allowing authors to include supplemental material with their submissions in 2010, we were very worried that the results sections would grow greatly, with people cramming previously supplemental material into the results. However, that did not happen. We saw no appreciable increase in the length of our articles.

AO: Will manuscripts that are not eligible for one of the badges for so-called open practices be penalized in any way?

EE: Several editors raised concerns that nonexperimental studies of personality, culture, cognitive development, and many other areas could be disadvantaged relative to experimental studies when it comes to completing the disclosure statements or to earning badges for posting raw data or materials used in the published project.

Regarding disclosure statements, I appreciate that in many areas of research (including naturalistic and longitudinal studies), researchers seldom report all the data at once. As mentioned earlier, correlational researchers in such diverse areas as behavioral genetics, personality theory, cultural psychology, and cognitive development may measure hundreds or even thousands of variables, most of which are set aside for other papers on separate issues involving different analyses.

Under the current disclosure plan, researchers in any area of research (experimental, longitudinal, naturalistic, etc.) are required to report only the dependent variables that were analyzed for a particular article’s targeted research question. If I measure 1,000 DVs and analyze only 3 of them for a particular paper, I’m required to report the results for those 3 — no matter how the results turn out — but not the remaining 997. Thus, authors would never have to make an embarrassing statement such as: “We do not report two-thirds of the data that we collected.” On the other hand, were one to say, “We do not report two-thirds of the data we analyzed for this article’s targeted research question,” then one would have some explaining to do.

Regarding badges, most of the feedback was positive, but I understand and appreciate why some worry that the badges are geared toward experimental studies with familiar participant samples (e.g., university students or MTurk respondents). Consider, for example, the Open Data badge. Researchers who work with hard-to-reach groups (e.g., illegal immigrants) or clinical populations may be precluded from publicly posting their data owing to privacy, safety, or other concerns. Relatedly, some research projects require the cooperation of organizations (public school systems, for instance) that won’t allow publication of the data, and many funding agencies have their own reporting requirements and regulations (e.g., data collected in Country A must not be hosted on a server located in Country B).

The general point is this: If researchers aren’t interested in earning a given badge, for any reason, that’s fine. But if researchers can’t earn a given badge (for reasons such as those given above), we will offer them the opportunity to say so and to include their statement in the published paper.

AO: You’ve been an integral part of APS’s efforts to address issues of replicability and research practices. What role can and does the journal play in those efforts? Would Psychological Science publish failures to replicate if the methods were powerful?

EE: With a view to improving the publication standards and practices of PS, the five initiatives we’ve talked about are, at most, steps in the right direction, not an ideal end state. The issues of replicability and research practices are complex but not intractable if the community at large gets involved. Science organizations like APS and their journals can help, but I think real, lasting progress has to come from the ground up, not the top down.

You ask if PS would publish failures to replicate if the methods were powerful. We already do. Many of the Commentaries we publish are exchanges between researchers who failed to find an effect and those who originally succeeded. As Geoff Cumming writes in his forthcoming tutorial:

A single study is rarely, if ever, definitive; additional related evidence is required. This may come from a close replication, which, with meta-analysis, should give more precise estimates than the original study. A more general replication may increase precision, and also provide evidence of generality or robustness of the original finding. We need increased recognition of the value of both close and more general replications, and greater opportunities to report them.

AO: Thanks for taking the time to speak with me about changes at Psychological Science. These are exciting new developments, and I, along with all members of APS, look forward to the continued development of the journal. Thank you for all your hard work in editing it so well. This journal has been blessed with a succession of great editors, each making the journal better and helping to enhance its premier status within psychology.

AO Note: Additional details about the initiatives described above are available in the journal’s 2014 Submission Guidelines and a new Psychological Science editorial.
