Could Quantum Computing Revolutionize Our Study of Human Cognition?

Quantum computers may bring enormous advances to brain research

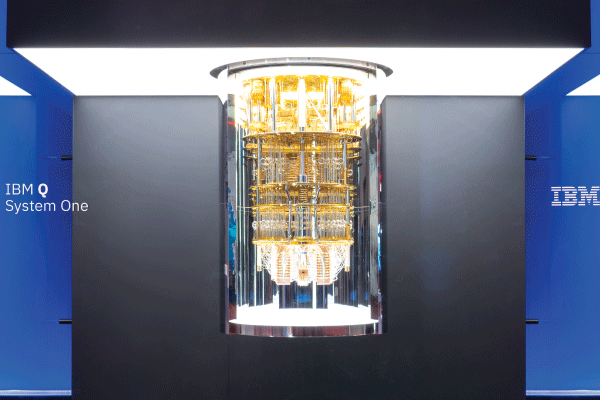

Image: IBM Q System One is the world’s first-ever circuit-based commercial quantum computer, introduced by IBM in January 2019.

Source: IBM.

Though a young and relatively underdeveloped technology, quantum computing may one day transform our understanding of complex natural systems like Earth’s climate, the nuclear reactions inside of stars, and human cognition.

Quantum computers achieve unprecedented calculating capabilities by harnessing the bizarre properties of matter on the subatomic scale, where electrons exist as clouds of probability and the states of entangled particles remain linked, irrespective of their distance apart.

But how far are we from fully realizing this new class of computers? What are its prospects to advance the study of artificial intelligence? And, when, if ever, will psychological scientists be able to write programs that unlock some of the secrets of human cognition?

For now, a daunting list of technological innovations stands in the way of answering these questions. We can, however, take a glimpse at the current frontier of quantum computing and consider the technological gaps that remain.

“Nature isn’t classical, dammit, and if you want to make a simulation of Nature, you’d better make it quantum mechanical, and by golly it’s a wonderful problem, because it doesn’t look so easy.”

Richard Feynman, “Simulating Physics With Computers”

Science fiction to technological fact

From the Earth-orbiting satellites first proposed by Arthur C. Clarke to the remote-controlled mechanical arms envisioned by Robert Heinlein, science fiction has often presaged technological innovations.

A lesser-known but equally influential example of speculative science fiction appeared in “When Harlie Was One,” written by David Gerrold and published in the early 1970s. Harlie (short for Human Analog Replication, Lethetic Intelligence Engine) was a newly created computer endowed with artificial intelligence that struggled with the same emotional and psychological dilemmas that many human adolescents face. To help guide it to adulthood, Harlie had the support of a psychologist named David Auberson, who tried to understand its immature yet phenomenally analytical mind.

This story about the intersection between human psychology and computer technology explored both the promise of artificial intelligence and the fundamental inability of biological and electronic brains to understand each other’s motivations and mental states.

Though we are likely centuries away from this kind of self-aware artificial intelligence, modern computers already apply so-called fuzzy logic (computing based on degrees of truth, not just zeros and ones) to solve a wide array of problems. They also use artificial intelligence algorithms to guide autonomous vehicles and neural networks to crudely mimic certain aspects of human cognition.

The challenge of comparing brains and computers

If you are looking for the most powerful graphics processing unit on the market today, you will find devices that contain about 54 billion transistors. Going a step further, if you had access to a supercomputer, you would have the power of 2.5 trillion transistors. These ginormous numbers, however, still pale in comparison to the biological wiring of the human brain, which contains, by one calculation, upwards of one thousand trillion (10^15) synapses (AI Impacts, n.d.). This illustrates that for all our advances in computer hardware, we are still orders of magnitude away from engineering the raw calculating power of the human brain.
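To make the comparison concrete, here is a rough back-of-the-envelope calculation using only the figures quoted above. Treating one transistor as comparable to one synapse is an assumption made purely for illustration, and the variable and function names are hypothetical.

```python
import math

# Figures quoted in the text above
gpu_transistors = 54e9               # about 54 billion
supercomputer_transistors = 2.5e12   # about 2.5 trillion
brain_synapses = 1e15                # upper estimate (AI Impacts, n.d.)

def orders_of_magnitude(larger, smaller):
    """Return log10 of the ratio between two counts."""
    return math.log10(larger / smaller)

gpu_gap = orders_of_magnitude(brain_synapses, gpu_transistors)
super_gap = orders_of_magnitude(brain_synapses, supercomputer_transistors)

print(f"Synapses per GPU transistor: {brain_synapses / gpu_transistors:,.0f}")
print(f"Synapses per supercomputer transistor: {brain_synapses / supercomputer_transistors:,.0f}")
print(f"Gap vs. GPU: {gpu_gap:.1f} orders of magnitude")
print(f"Gap vs. supercomputer: {super_gap:.1f} orders of magnitude")
```

On these numbers, the brain has roughly 400 synapses for every transistor in the supercomputer. A raw count like this says nothing about what each component can do, so it is best read as a sense of scale rather than a measure of computing power.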

In a special supplement to Nature, “The Four Biggest Challenges in Brain Simulation,” science writer Simon Makin explored this vast divide by outlining four hurdles to quantum modeling of the brain: scale, complexity, speed, and integration.

Researchers have taken some early steps toward bridging the differences in scale between synapses in the human brain and transistors in a classical computer by creating scaled-down models of the brain. According to Makin, the most detailed simulation incorporating biophysical models was that of a partial rat brain, with 31,000 neurons connected by 36 million synapses.

Beyond the limitations of scale, there is a vast difference in complexity between the operations of classical computers and cognition in the human brain on the molecular level. Though research teams are creating databases of brain-cell types across species to study brain function on the cellular level, there are limits to the data researchers can collect, given that some data on the human brain cannot be gathered noninvasively.

In comparing brains to computers, Makin also noted that speed means more than the raw processing power of a computer chip. Computer analogs must also take into account the amount of time it takes a brain to develop and learn new skills. To overcome this temporal difference, computers would have to run faster than real time, which is not yet possible for complex simulations.

Finally, Makin addressed what he calls the integration problem. A top-down approach in which partial models of brain regions are combined into a brain-wide network needs to be combined with a bottom-up approach using simulations based on biophysical models. In the end, he noted that some aspects of mind “such as understanding, agency, and consciousness, might never be captured” by a computer model of the brain.

Bridging the divide between quantum computing

A quantum computer operates by controlling the behavior of fundamental subatomic particles like photons and electrons. But unlike larger agglomerations of matter—atoms, molecules, or people—subatomic particles are notoriously unruly. This is both a blessing and a curse when using them to make computations.

It is a blessing because it enables quantum computers to perform specific tasks at almost unimaginable speeds. For example, today’s basic quantum processors can manipulate vast amounts of incomplete or “fuzzy” data, making them ideal for factoring large numbers, which is a key step along the path to secure quantum cryptography.

It is a curse because the more powerful the quantum computer, the harder it is to control, program, and operate.

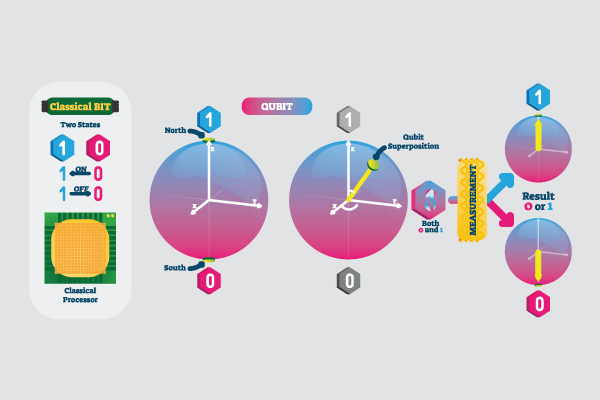

The fundamental difference between a classical computer and a quantum computer boils down to how they manipulate “bits,” or single pieces of data. For a classical computer, bits are just vast streams of zeros and ones, the binary code of machine language.

A quantum bit, however, is not so rigid. It can be a zero, a one, or an infinite range of possibilities in between. This is the quantum property known as superposition, made famous by Schrödinger’s thought experiment that rendered an unobserved cat both alive and dead at the same time.
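One way to picture superposition is as a pair of numbers, called amplitudes, whose squared magnitudes give the odds of reading a zero or a one. The short Python sketch below is a toy model of that idea on an ordinary computer, not code for a real quantum device; the helper names are hypothetical.

```python
import math
import random

# A single qubit is described by two complex amplitudes (alpha, beta)
# with |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)   # definitely 0
ONE = (0 + 0j, 1 + 0j)    # definitely 1

def superposition(theta):
    """Hypothetical helper: a state between 0 and 1, set by the angle theta."""
    return (complex(math.cos(theta / 2)), complex(math.sin(theta / 2)))

def probabilities(state):
    """Born rule: chance of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state, rng=random):
    """Measurement collapses the qubit to a plain 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

equal_mix = superposition(math.pi / 2)
p0, p1 = probabilities(equal_mix)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Changing the angle passed to `superposition` slides the qubit anywhere between a certain zero and a certain one, which is the "infinite range of possibilities" described above. Each measurement still returns only a single zero or one.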

The fluid nature of quantum bits, or qubits, as they are known, means they can be manipulated in ways that classical bits cannot. This is essential because simulating nature with classical computers is technically difficult from both a hardware and a software perspective, as you must account for all possible variables. Quantum computers, with their greater degrees of freedom, do not need to rely on such programming brute force; they simply mimic the system.

That’s not to say brute force isn’t necessary with quantum computers. It just comes at the front end.

To get fundamental particles to use their quantum properties, researchers must first cool them to just a fraction of a degree above absolute zero. (IBM’s Q System One quantum computer uses layer upon layer of refrigeration to reach such extreme temperatures. The cascading design has been dubbed “the Chandelier.”)

Next, engineers use magnetic fields to fix the qubits in their proper state and microwave pulses to either flip the state of each qubit to zero or one or put it in superposition. Multiple pulses can also entangle two qubits, making them intrinsically bound.
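The three operations just described (flipping a qubit, putting it in superposition, and entangling a pair) have standard mathematical counterparts, usually called the X, Hadamard, and CNOT gates. The sketch below applies them to a list of four amplitudes in plain Python. It models only the arithmetic, not the microwave pulses or hardware, and the function names are hypothetical.

```python
import math

# Two-qubit state as four amplitudes, ordered |00>, |01>, |10>, |11>.
# The first qubit is the left-hand digit.
state = [1.0, 0.0, 0.0, 0.0]   # both qubits start at 0

def flip_first(s):
    """X gate on the first qubit: swaps its 0 and 1."""
    return [s[2], s[3], s[0], s[1]]

def hadamard_first(s):
    """H gate on the first qubit: puts it in an equal superposition."""
    r = 1 / math.sqrt(2)
    return [r * (s[0] + s[2]), r * (s[1] + s[3]),
            r * (s[0] - s[2]), r * (s[1] - s[3])]

def cnot(s):
    """CNOT: flips the second qubit only when the first qubit is 1."""
    return [s[0], s[1], s[3], s[2]]

# Superposition followed by CNOT yields an entangled pair.
bell = cnot(hadamard_first(state))
probs = [round(a * a, 3) for a in bell]
print(dict(zip(["00", "01", "10", "11"], probs)))
```

In the resulting state, the only possible readings are 00 and 11, each with equal probability. Measuring one qubit therefore fixes what the other will show, which is what it means for the two to be bound together.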

But the challenges don’t stop there. Quantum researchers also need to find a way to program the system with sophisticated algorithms, keep the quantum state stable so it doesn’t lose information, and add sufficient qubits to mimic the system being studied, including neural networks.

“Quantum neural networks have been explored to some degree, and while this is promising, a big challenge is to get classical data into a quantum computer,” said Michael Hartmann, a professor of theoretical physics at the University of Erlangen–Nuremberg in Germany. “That is, quantum computers can handle a huge amount of data—an amount that grows exponentially with the number of qubits. Hence one would think they are ideal for machine learning. Yet, to make use of the great capacities of quantum computers, you need to offer them the data as a quantum state. It is a huge effort to store such a large amount of classical data—and classical is the format of the data we have—into a quantum state.”
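Hartmann's point about capacity and loading can be illustrated with a scheme often called amplitude encoding, in which a list of classical numbers is rescaled so that it could serve as the amplitudes of a quantum state. The Python sketch below shows only the bookkeeping on a classical machine. The sample data and function names are hypothetical, and the difficult part, actually preparing such a state on hardware, is not modeled.

```python
def qubits_needed(num_values):
    """Smallest number of qubits whose 2**n amplitudes can hold the data."""
    return max(1, (num_values - 1).bit_length())

def amplitude_encode(values):
    """Map classical numbers to normalized amplitudes (padding with zeros)."""
    n = qubits_needed(len(values))
    padded = list(values) + [0.0] * (2 ** n - len(values))
    norm = sum(v * v for v in padded) ** 0.5
    if norm == 0:
        raise ValueError("cannot encode an all-zero vector")
    return [v / norm for v in padded]

data = [3.0, 1.0, 2.0, 0.5, 4.0]   # hypothetical classical measurements
amplitudes = amplitude_encode(data)
print(len(amplitudes), "amplitudes on", qubits_needed(len(data)), "qubits")

# Capacity doubles with every added qubit.
for n in (10, 20, 30):
    print(f"{n} qubits -> {2 ** n:,} amplitudes")
```

Thirty qubits already correspond to more than a billion amplitudes, which is why the capacity looks so attractive for machine learning. The catch is that every one of those values has to be written into the quantum state first.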

Also, unlike in classical computing, where it’s possible to simply add more bits to solve a problem, the hardware in quantum computing is not yet reliable enough to simply scale up. The more qubits you add, the more computing power you get, but you also introduce an increased chance of error into the system, among other structural problems.
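A simple probability model gives a feel for why errors pile up as qubits are added. The Python sketch below assumes every qubit fails independently with the same small probability in a given step. Both that independence and the 1% rate are illustrative assumptions, not measured figures for any real machine.

```python
def error_free_probability(num_qubits, error_rate):
    """Chance that no qubit errs in one step, assuming independent errors."""
    return (1 - error_rate) ** num_qubits

ERROR_RATE = 0.01   # illustrative assumption, not a measured hardware figure

for n in (1, 10, 50, 100):
    p = error_free_probability(n, ERROR_RATE)
    print(f"{n:>3} qubits: {p:.1%} chance of an error-free step")
```

Under these assumptions, the chance of a clean step falls steadily as the register grows, so a larger machine needs either much lower error rates or some way of detecting and correcting mistakes.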

“Hence, while better hardware is obviously needed in quantum computing and will remain a main goal for the next decade or more, there is also a need for concepts on how to best use ‘quantumness’ to model decision-making cognition,” said Hartmann. “My own judgment is that this is a very intriguing direction to explore, but we are really only at the very beginning.”

Published in the print edition of the March/April issue with the headline “Quantum Leap.”

References

Feynman, R. (1982). Simulating physics with computers. International Journal of Theoretical Physics, 21(6/7), 467–488. (Keynote address delivered at the MIT Physics of Computers Conference, 1981.)

AI Impacts. (n.d.). Scale of the human brain. https://aiimpacts.org/scale-of-the-human-brain/

Consumer Technology Association. (2020, May 19). The potential of quantum computing. https://www.ces.tech/Articles/2020/The-Potential-of-Quantum-Computing.aspx

IBM. (n.d.). Introduction to quantum computing. https://www.ibm.com/quantum-computing/what-is-quantum-computing

Makin, S. (2019). The four biggest challenges in brain simulation. Nature, 571(S9). https://doi.org/10.1038/d41586-019-02209-z

Musser, G. (2018, January 29). Job one for quantum computers: Boost artificial intelligence. Quanta Magazine. https://www.quantamagazine.org/job-one-for-quantum-computers-boost-artificial-intelligence-20180129/
