Citizens Versus the Internet: Confronting Digital Challenges With Cognitive Tools

Psychological Science in the Public Interest (Volume 21, Number 3)

The Internet is an indispensable and global virtual environment in which people constantly communicate, seek information, and make decisions. The architecture of this digital environment influences people’s interactions with it. Despite many advantages of this architecture, it is also responsible for some negative outcomes, such as the spread of misinformation, rising incivility in online interactions, the facilitation of ideological extremisms, and a decline in decision autonomy. Interventions that allow users to gain more control over their digital environments could counteract these negative consequences.

In this issue of Psychological Science in the Public Interest (Volume 21, Issue 3), Anastasia Kozyreva, Stephan Lewandowsky, and Ralph Hertwig identify the challenges that online environments pose to their users and explain how psychological science can inform interventions to decrease the negative consequences of Internet use.

Challenges in online environments

Online environments have introduced challenges that endanger individuals’ well-being and the functioning of society, from cyberbullying to widespread misinformation and opinion manipulation. Some of these challenges stem from differences between online and offline environments. In comparison to face-to-face communication and other offline environments, online environments provide much larger audiences, enabling users to broadcast messages to potentially millions of other users. In addition, there are fewer constraints on information proliferation and storage, changes occur rapidly (i.e., content can be added or deleted in seconds), there are fewer chances for social calibration, and people are more likely to feel disinhibited and self-disclose information, which can lead to incivility (e.g., posting inflammatory comments to deliberately provoke and upset others). Other factors that may create negative outcomes for Internet users include the use of algorithms and choice architectures, designed to guide users’ behaviors, which are much easier to create in online environments than in offline environments.

Kozyreva and colleagues identify four major types of challenges that users encounter in online environments. First, persuasive and manipulative choice architectures—the strategic design of user interfaces that aim to steer users’ online behavior to benefit commercial interests (e.g., default settings that intrude on privacy)—challenge decision autonomy and informed choice. Second, AI-assisted information architectures—algorithmic tools that filter and mediate information online (e.g., targeted advertising, curation of news feeds on social media)—challenge both decision autonomy and control over the environment. Third, false and misleading information—online content that is not based on facts and misleads the public by instilling inaccurate beliefs and undermining trust in media or science—challenges the reasoning and civility of the public conversation. Fourth, distracting environments—digital environments designed to monopolize and/or direct attention to certain information or products—challenge attention and control.

The role of psychological science

Psychological science can help to inform policy interventions in the digital world. It is “crucial to ensure that psychological and behavioral sciences are employed not to manipulate users for financial gain but instead to empower the public to detect and resist manipulation,” Kozyreva and colleagues write.

Specifically, psychological and social sciences can provide behavioral and cognitive tools that contribute to interventions from other fields. The field of law and ethics, for example, can provide ethical guidelines and regulations. The education field can provide curricula for digital information literacy. And the technology field can provide automated detection of harmful materials and help to implement more ethically designed online choice architectures.

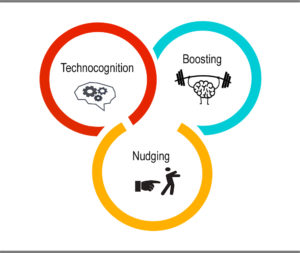

The authors identify three approaches informed by psychological science that may help to create a digital world with fewer negative consequences than the current digital world: Nudges aim to guide people’s behavior through the design of choice architectures (e.g., default privacy-respecting settings); technocognition aims to design technological solutions informed by psychological principles (e.g., making sharing offensive material more difficult); and boosts aim to improve people’s cognitive and motivational competencies.

Behavioral and cognitive tools

Focusing on boosts, Kozyreva and colleagues describe four tools that the designers of digital environments can implement to improve Internet users’ competencies.

- Self-nudging aims to enhance users’ control over their digital environments. A form of self-nudging arises from designing digital environments that foster users’ competencies to set their own defaults, adjust notifications, install ad blockers, and organize their online environments to avoid undesirable interruptions or triggers. Self-nudging leads Internet users to exercise their freedom and autonomy.

- Deliberate ignorance consists of deliberately not seeking or using information. It can be used as an information management tool (e.g., abstaining from seeking health information that might be inaccurate or even a conspiracy theory).

- Simple decision aids (e.g., the use of linguistic cues to distinguish between authentic and fictitious online product reviews or to determine the legitimacy of a page) can help people accurately evaluate the content they encounter online.

- Inoculation (or prebunking), much like a vaccine, uses small and controlled exposure to misinformation to boost people’s resilience to online misinformation and manipulation. Passive inoculation includes a warning about potential misinformation, the refutation of an anticipated argument in a weakened form (e.g., an example and explanation of a deceptive technique used to question a scientific consensus), and an exposure to the misinformation. Active inoculation involves a test (e.g., rating of news credibility), active learning (e.g., a game that presents disinformation strategies), and a postintervention test similar to the first test.

The use of these tools, informed by psychological science, may combat the negative consequences of digital environments and empower Internet users to have more control over their online environments. Kozyreva and colleagues suggest that Internet users and policymakers adopt these “simple rules for mindful Internet behavior that could become as routine as washing one’s hands or checking for cars before crossing the street.”

Recognizing the Role of Psychological Science in Improving Online Spaces

By Lisa K. Fazio, Vanderbilt University

Education and the improvement of online spaces

In an accompanying commentary, Lisa K. Fazio (Vanderbilt University), a cognitive and developmental psychologist who studies memory and how people learn true and false information, prefers to “view psychology and an understanding of the human mind as a tool that can be used to better interventions of all types.” Fazio suggests that nudges, boosts, and technocognition might not be distinct categories with clear boundaries and that boosts might simply be a form of education. If one classifies boosts as a form of education, one can use psychological science to make both teaching and boosts more effective (e.g., spreading out the contact with misinformation, a strategy known as spacing, to make learning about it more efficient). Fazio agrees with Kozyreva and colleagues in calling for a broad definition of digital-media literacy and suggests that the best tips for media literacy are those that can be easily and quickly applied, for example, while scrolling through a social-media feed. She argues that implementing multiple small changes that may not be perfect on their own can build up to make online environments and their users less susceptible to disinformation.
