
Citation-Based Indices of Scholarly Impact: Databases and Norms

Scholarly impact has long been an intriguing research topic (Nosek et al., 2010; Sternberg, 2003) as well as a crucial factor in making consequential decisions (e.g., hiring, tenure, promotion, research support, professional honors). As decision makers ramp up their reliance on objective measures (Abbott, Cyranoski, Jones, Maher, Schiermeier, & Van Noorden, 2010), quantifying scholarly impact effectively has never been more important. Conventional measures such as the number of articles published remain popular, but modern citation-based indices offer many advantages (Ruscio, Seaman, D’Oriano, Stremlo, & Mahalchik, 2012). We discuss two attractive indices, show that PsycINFO or Web of Science searches yield comparable results, and provide norms for psychological scientists on these indices.

The h and mq Indices

In a trailblazing paper, Hirsch (2005) introduced the h index, defined as the largest number h such that at least h articles are cited at least h times each. For example, suppose that Professor X has published 8 articles whose rank-ordered citation counts are {10, 7, 4, 2, 1, 1, 0, 0}. This yields h = 3 because there are 3 articles cited at least 3 times each, but not 4 articles cited at least 4 times each.
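The definition translates directly into a few lines of code. The following is a minimal sketch in Python, not code from Hirsch (2005); the function name `h_index` and the variable names are illustrative choices.

```python
def h_index(citations):
    """Return the largest h such that at least h articles have >= h citations each."""
    # Rank articles from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` articles all have at least `rank` citations
        else:
            break      # counts only decrease from here, so no larger h is possible
    return h


# Professor X from the example above.
professor_x = [10, 7, 4, 2, 1, 1, 0, 0]
print(h_index(professor_x))  # 3
```

The fourth-ranked article has only 2 citations, which is why the loop stops at h = 3.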

The h index is easy to calculate and, with a little practice, easy to understand. Whereas counting publications rewards productivity regardless of quality or impact, the h index rewards a balance between the quantity and quality of one’s work. Neither a large number of articles that are seldom cited nor a small number of highly cited articles leads to a large h index. One must produce a steady stream of influential work to attain a high h index. Ruscio et al. (2012) evaluated the relative merits of 22 metrics, including several conventional measures, the h index, and many variants that followed its introduction. They found that the h index was highly robust to outliers (e.g., highly cited papers or data retrieval/entry errors) and among a small handful of metrics that achieved the greatest empirical validity.
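These properties can be seen with a few made-up citation profiles. The sketch below is in Python; all citation counts are hypothetical and chosen only for illustration, and the helper `h_index` is an illustrative name rather than anything from the cited papers.

```python
def h_index(citations):
    """Largest h such that at least h articles have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    # In descending order, "count >= rank" holds for exactly the first h ranks.
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


# Hypothetical profiles (not data from any study).
many_seldom_cited = [1] * 50      # 50 articles, 1 citation each
few_highly_cited = [500, 300]     # 2 articles, heavily cited
steady_stream = [25, 22, 20, 18, 15, 14, 12, 11, 10, 10, 9, 8]

# Same steady profile, but the top paper is an extreme outlier
# (or its count was inflated by a data entry error).
with_outlier = [2500] + steady_stream[1:]

print(h_index(many_seldom_cited))  # 1
print(h_index(few_highly_cited))   # 2
print(h_index(steady_stream))      # 10
print(h_index(with_outlier))       # 10
```

Multiplying the top paper's count by 100 leaves h unchanged, whereas a total citation count would change dramatically.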

Hirsch (2005) also introduced the m quotient, or mq index, to adjust h in a way that takes into account career stage. Specifically, mq is calculated as h divided by publishing age, or the number of years since one’s earliest article. This adjustment to the h index can be especially useful when comparing individuals at different career stages. For example, Ruscio et al. (2012) found that scores on the mq index were highly similar for assistant, associate, and full professors.
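As a sketch of this calculation in Python: the function name `m_quotient`, its parameters, and the example values are hypothetical, and the handling of a publishing age of zero (raising an error) is an assumption made here, since the text does not address that case.

```python
def m_quotient(h, first_publication_year, current_year):
    """Return h divided by publishing age (years since the earliest article)."""
    publishing_age = current_year - first_publication_year
    if publishing_age <= 0:
        # Assumption: mq is treated as undefined until at least one year has passed.
        raise ValueError("publishing age must be at least 1 year")
    return h / publishing_age


# Two hypothetical scholars at different career stages.
early_career = m_quotient(h=12, first_publication_year=2000, current_year=2012)
late_career = m_quotient(h=24, first_publication_year=1982, current_year=2012)

print(early_career)  # 1.0
print(late_career)   # 0.8
```

Although the second scholar's h is twice as large, the two mq values are similar once publishing age is taken into account.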

Comparing Citation Databases

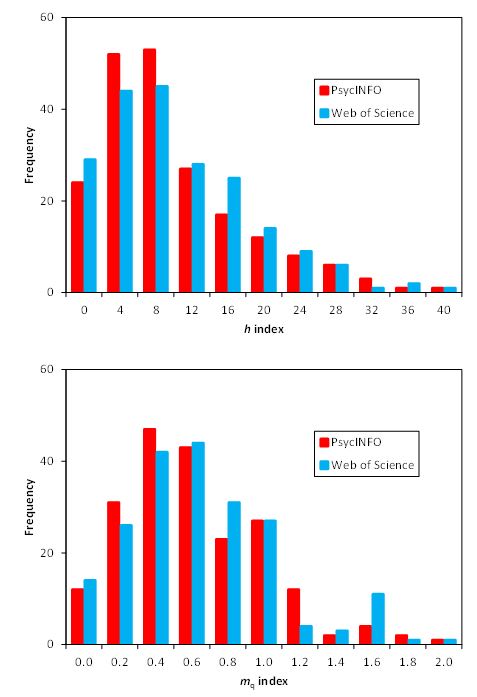

Figure 1. Score distributions for the h and mq indices for 204 university-affiliated psychology professors.

We collected a large sample of data to determine whether one obtains comparable h and mq when citations are retrieved using different databases. PsycINFO is well known to psychologists, and it indexes articles from over 2,450 journals; we restricted our searches to peer-reviewed journal articles. The Web of Science database included the Science Citation Index, the Social Sciences Citation Index, and the Arts and Humanities Citation Index. In total, this database indexes approximately 1,468 journals that span a broader range of disciplines. We did not perform searches using Google Scholar. Though this database usually returns substantially larger numbers of citations than PsycINFO or Web of Science, it has two major drawbacks. First, it includes target and citing works from less rigorously reviewed venues (e.g., conference presentations, unpublished manuscripts). Second, it yields a much larger proportion of erroneous citations than PsycINFO or Web of Science, and an extremely laborious process is required to attempt to clean the data (Garcia-Perez, 2010).

The population for this study was professors affiliated with university-based doctoral programs in psychology in the United States. The National Research Council (NRC) listed 185 such universities (Goldberger, Maher, & Flattau, 1995). A random sample of universities was selected, and up to 20 tenured or tenure-track faculty were selected at random from each program. A number of precautions were taken to ensure that the citation data retrieved from both databases corresponded to the same target author at the same point in time; details are available on request.

For this sample of 204 university-affiliated psychology professors, there was a total of 6,130 articles cited 170,468 times in the PsycINFO database and 5,415 articles cited 170,688 times in the Web of Science database. Figure 1 shows that the distributions of scores on the h and mq indices were very similar. The same was true of their means: For the h index, M_PsycINFO = 11.26, M_WoS = 11.63 (t[203] = -1.67, p = .096); for the mq index, M_PsycINFO = 0.71, M_WoS = 0.73 (t[203] = -1.23, p = .218). Finally, across databases there was a strong correlation for h indices (r = .92) and for mq indices (r = .86). These results suggest that it should make little difference whether one calculates the h and mq indices using citations obtained by searching the PsycINFO or Web of Science database. Neither database puts individuals at a systematic advantage or disadvantage with respect to these indices of scholarly impact. However, to the extent that an individual publishes (and is cited) in more specialized social science journals, PsycINFO is likely to retrieve more of the relevant articles and citations. The reverse would be true for an individual who publishes (and is cited) in journals across a broader range of scientific disciplines.
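For readers who want to run this kind of comparison on their own data, the two statistics reported above (a paired-samples t and a Pearson correlation) can be sketched in Python using only the standard library. The function names are illustrative, and the six pairs of h indices are invented toy data, not values from this study. Differences are taken as PsycINFO minus Web of Science, so a negative t means the Web of Science scores were higher on average.

```python
import math
import statistics


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x - y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


# Toy data: h indices for the same six hypothetical authors in each database.
h_psycinfo = [10, 12, 8, 15, 11, 9]
h_wos = [11, 12, 9, 15, 12, 9]

t_value, df = paired_t(h_psycinfo, h_wos)
r_value = pearson_r(h_psycinfo, h_wos)
print(f"t[{df}] = {t_value:.2f}, r = {r_value:.2f}")
```

Obtaining a p value additionally requires the t distribution, which the standard library does not provide; a statistics package (for example, `scipy.stats.ttest_rel`) would be one way to get it.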

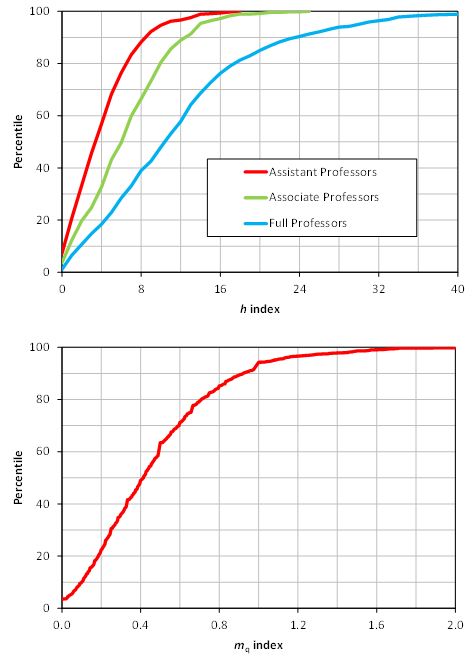

Figure 2. Percentiles for the h and mq indices for 1,750 university-affiliated psychology professors.

Norms for the h and mq Indices

An even larger sample of data, collected by Ruscio et al. (2012), allowed us to examine norms for psychological scientists on the h and mq indices. The population of target authors was the same as above, but 10 professors were randomly selected from each of the 175 programs on the NRC list that maintained a web site listing faculty. This sample included n = 450 assistant professors, n = 471 associate professors, and n = 829 full professors; adjunct, affiliated, and emeritus faculty were excluded, and distinguished professors were coded as full professors. For this sample of 1,750 university-affiliated psychology professors, there was a total of 48,692 articles cited 919,883 times in the PsycINFO database. Figure 2 shows the distributions of scores on the h and mq indices. For the h index, distributions are presented separately by rank. A single distribution is presented for the mq index because, as noted earlier, its adjustment for publishing age yielded highly similar scores across professor ranks.

These graphs provide one way to determine how the scholarly impact of a psychological scientist compares to a population of his or her university-affiliated peers. The results should generalize fairly well when citations are retrieved via Web of Science searches. These norms would not apply when using Google Scholar (Garcia-Perez, 2010). Until tools for searching and working with Google Scholar data are refined, we recommend relying on PsycINFO or Web of Science to calculate citation-based indices of scholarly impact for psychological scientists.
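Reading a percentile off such a graph amounts to asking what share of the normative sample scored at or below a given value. A minimal sketch in Python follows; the function name `percentile_rank` is illustrative, the "at or below" definition is one of several common conventions and is an assumption here, and the ten normative scores are placeholders rather than the data behind Figure 2.

```python
from bisect import bisect_right


def percentile_rank(score, norm_scores):
    """Percentage of normative scores that are less than or equal to `score`."""
    ordered = sorted(norm_scores)
    if not ordered:
        raise ValueError("norm_scores must not be empty")
    # bisect_right counts how many ordered values are <= score.
    return 100.0 * bisect_right(ordered, score) / len(ordered)


# Placeholder norms: h indices for ten hypothetical professors of the same rank.
hypothetical_norms = [2, 3, 5, 6, 8, 9, 11, 14, 18, 25]

print(percentile_rank(9, hypothetical_norms))  # 60.0
```

With real norms, the h index would be compared against scores for the appropriate professor rank, whereas a single normative distribution would serve for the mq index.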

References

Abbott, A., Cyranoski, D., Jones, N., Maher, B., Schiermeier, Q., & Van Noorden, R. (2010, June 17). Do metrics matter? Nature, 465, 860-862. doi:10.1038/465860a

Garcia-Perez, M. A. (2010). Accuracy and completeness of publication and citation records in the Web of Science, PsycINFO, and Google Scholar: A case study for the computation of h indices in psychology. Journal of the American Society for Information Science and Technology, 61, 2070-2085. doi:10.1002/asi.21372

Goldberger, M. L., Maher, B. A., & Flattau, P. E. (Eds.) (1995). Research doctorate programs in the United States: Continuity and change. Washington, DC: National Academies Press.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences, 102, 16569-16572. doi:10.1073/pnas.0507655102

Nosek, B. A., Graham, J., Lindner, N. M., Kesebir, S., Hawkins, C. B., Hahn, C., et al. (2010). Cumulative and career-stage citation impact of social-personality psychology programs and their members. Personality and Social Psychology Bulletin, 36, 1283-1300. doi:10.1177/0146167210378111

Ruscio, J., Seaman, F., D’Oriano, C., Stremlo, E., & Mahalchik, K. (2012). Measuring scholarly impact using modern citation-based indices. Measurement: Interdisciplinary Research and Perspectives, 10(3), 123-146. doi:10.1080/15366367.2012.711147

Sternberg, R. J. (Ed.) (2003). The anatomy of impact: What makes the great works of psychology great. Washington, DC: American Psychological Association.
